NVIDIA GH200 Grace Hopper Server: Arm Grace CPU Compute Node for AI Training, AI Inference, and Ultra-Large-Scale Language Model Training

Comparison with Previous Generations

| Feature | NVIDIA GH200 | NVIDIA A100 | NVIDIA H100 |
|---|---|---|---|
| Architecture | Grace Hopper (Arm CPU + Hopper GPU) | Ampere | Hopper |
| Memory | Up to 576GB LPDDR5X + HBM3 | 80GB HBM2e | 80GB HBM3 |
| Memory Bandwidth | 1.2TB/s | 2TB/s | 3TB/s |
| AI Performance | 40X faster inference (vs. previous-gen) | Strong AI performance | Leading AI acceleration |
| Power Efficiency | 2X better efficiency | Standard | Optimized for AI |
| Interconnect | NVLink-C2C (900GB/s) | PCIe 4.0 | NVLink 4.0 |
| Target Use | HPC, LLMs, AI, Cloud Computing | HPC, AI workloads | Enterprise AI, supercomputing |

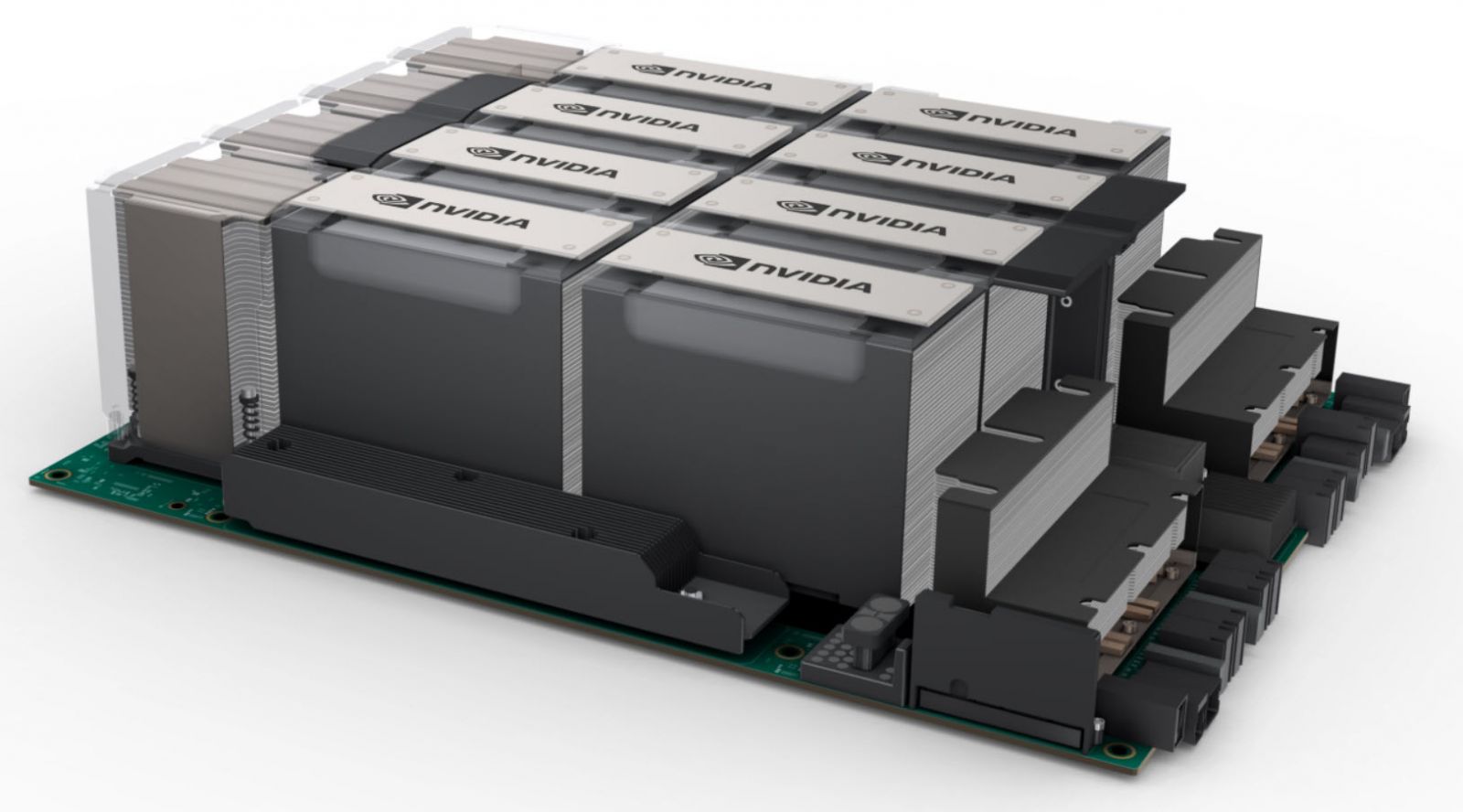

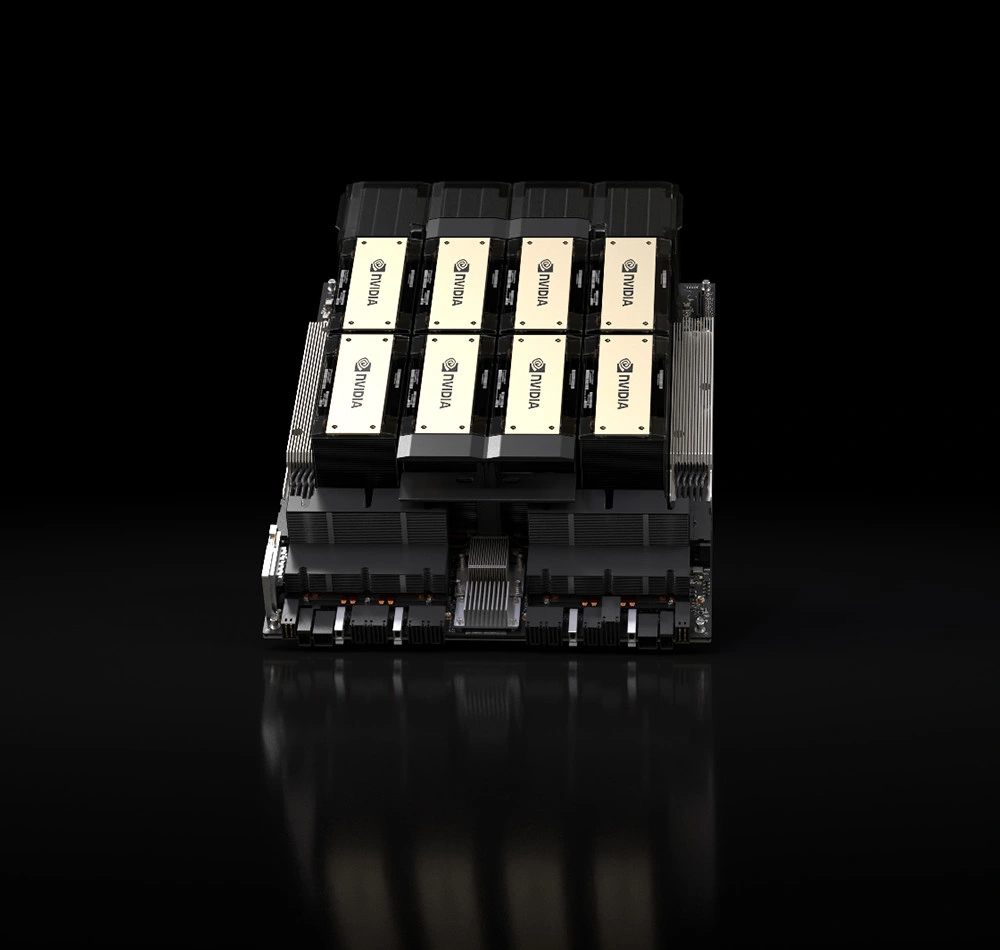

NVIDIA GH200 Grace Hopper Superchip

The NVIDIA GH200 Grace Hopper Superchip is an AI and high-performance computing (HPC) processor designed for data centers, AI training, large-scale inference, and scientific computing. It combines an NVIDIA Grace CPU (based on the Arm architecture) with an NVIDIA Hopper GPU in a single, high-bandwidth package, delivering high AI and HPC performance while optimizing power efficiency.

Key Features of NVIDIA GH200

✅ 1. CPU + GPU Integration

- NVIDIA Grace CPU: 72-core ARM-based processor designed for high-efficiency computing.

- NVIDIA Hopper GPU: AI-optimized GPU architecture supporting Tensor Cores and Transformer Engine for deep learning and scientific computing.

- Coherent Memory Architecture: Uses NVLink-C2C to integrate the CPU and GPU with 900GB/s bandwidth, enabling seamless data sharing.
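
To put the 900GB/s figure in context, here is a rough back-of-the-envelope sketch of ideal transfer times. The PCIe Gen5 x16 number (128GB/s, bidirectional peak) and the 100GB payload are assumptions chosen for illustration, not figures from this listing, and real transfers will be slower than these ideal values.

```python
# Ideal transfer-time estimate between CPU and GPU memory.
# NVLINK_C2C_GB_PER_S is the figure quoted in this listing; the PCIe value
# and the payload size are illustrative assumptions.

NVLINK_C2C_GB_PER_S = 900.0     # GB/s, as quoted for NVLink-C2C
PCIE_GEN5_X16_GB_PER_S = 128.0  # GB/s, assumed bidirectional peak of PCIe Gen5 x16


def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move payload_gb at a given peak bandwidth (no overheads)."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    payload_gb = 100.0  # hypothetical working set
    t_c2c = transfer_seconds(payload_gb, NVLINK_C2C_GB_PER_S)
    t_pcie = transfer_seconds(payload_gb, PCIE_GEN5_X16_GB_PER_S)
    print(f"NVLink-C2C: {t_c2c:.3f} s")
    print(f"PCIe Gen5 x16 (assumed): {t_pcie:.3f} s")
    print(f"Ratio: {t_pcie / t_c2c:.1f}x")
```

Under these assumptions the coherent link moves the same payload roughly seven times faster than the PCIe baseline.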

✅ 2. Memory & Bandwidth

- Up to 576GB of combined LPDDR5X and HBM3 memory, with ECC (Error-Correcting Code).

- Up to 1.2TB/s memory bandwidth, far exceeding traditional CPU-GPU configurations.

- HBM3 (High-Bandwidth Memory 3) support for ultra-fast AI model training and inferencing.

✅ 3. Performance & AI Capabilities

- Up to 40X faster AI inference for LLMs (Large Language Models) compared to previous-generation GPUs.

- Optimized for HPC workloads, including weather simulation, drug discovery, and financial modeling.

- Supports FP8, FP16, TF32, and INT8 AI calculations to enhance deep learning performance.
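
As a rough illustration of why the supported precisions matter, the sketch below estimates the memory needed for model weights alone at each format. The 70-billion-parameter model is a hypothetical example, and the estimate ignores activations, KV cache, and optimizer state, so real requirements will be higher.

```python
# Weight-only memory footprint by numeric format.
# Bytes per value: FP8 and INT8 use 1 byte, FP16 uses 2 bytes. TF32 is a
# Tensor Core compute mode; the data itself is stored as 32-bit floats (4 bytes).
BYTES_PER_PARAM = {"FP8": 1, "INT8": 1, "FP16": 2, "TF32": 4}

MEMORY_CAPACITY_GB = 576  # "up to" capacity quoted in this listing


def weights_gb(num_params: int, fmt: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    num_params = 70_000_000_000  # hypothetical 70B-parameter model
    for fmt in BYTES_PER_PARAM:
        size = weights_gb(num_params, fmt)
        fits = "fits" if size <= MEMORY_CAPACITY_GB else "does not fit"
        print(f"{fmt}: {size:.0f} GB of weights ({fits} in {MEMORY_CAPACITY_GB} GB)")
```

Halving the bytes per parameter halves the weight footprint, which is one reason lower-precision formats such as FP8 are attractive for large-model inference.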

✅ 4. Scalability & Networking

- NVLink interconnect for multi-GPU scaling, alongside the NVLink-C2C link between the CPU and GPU within each superchip.

- Supports NVIDIA Quantum-2 InfiniBand and NVIDIA BlueField-3 DPUs for high-speed networking in cloud and on-premise data centers.

- Can be used in MGX modular server architectures, allowing flexible system configurations.

✅ 5. Energy Efficiency & Sustainability

- 2X energy efficiency compared to x86-based CPU-GPU setups.

- Uses LPDDR5X memory, consuming 50% less power than traditional DDR5 memory.
