Wholesale High Performance NVIDIA HGX H100/NVIDIA HGX-2/NVIDIA HGX A800/NVIDIA HGX V100/HGX H100 eight-GPU Server

Here’s a detailed comparison table for the NVIDIA HGX platforms:

| Feature | NVIDIA HGX A100 | NVIDIA HGX H100 | NVIDIA HGX-2 | NVIDIA HGX A800 | NVIDIA HGX V100 |

|---|---|---|---|---|---|

| Release Year | 2020 | 2022 | 2018 | 2022 | 2017 |

| GPU Architecture | Ampere (A100) | Hopper (H100) | Volta | Ampere (A800, China-specific) | Volta |

| Number of GPUs | 4 or 8 | 4 or 8 | 16 | 4 or 8 | 4 or 8 |

| GPU Memory | 40 GB or 80 GB per GPU | 80 GB per GPU | 32 GB per GPU | 40 GB or 80 GB per GPU | 16 GB or 32 GB per GPU |

| Total GPU Memory | Up to 640 GB | Up to 640 GB | 512 GB | Up to 640 GB | Up to 256 GB |

| Memory Type | HBM2 | HBM3 | HBM2 | HBM2 | HBM2 |

| Performance (FP16) | Up to 10 PFLOPS | Up to 32 PFLOPS | 2 PFLOPS | Slightly lower than A100 | Up to 125 TFLOPS |

| Performance (FP8) | Supported | Fully supported | Not supported | Not supported | Not supported |

| Interconnect | NVSwitch + NVLink | NVSwitch + NVLink | NVSwitch + NVLink | NVSwitch + NVLink | NVLink |

| Networking | PCIe Gen4 | PCIe Gen5 | PCIe + NVSwitch | PCIe Gen4 | PCIe Gen3 |

| Power Consumption | ~6–10 kW | ~6–10 kW | ~10 kW | ~6–10 kW | ~3–5 kW |

| Use Cases | AI training, inference | Generative AI, HPC | Large-scale AI, HPC | AI, HPC (export-restricted) | AI research, HPC |

| Cooling System | Air or Liquid-cooled | Liquid-cooled | Air-cooled | Air or Liquid-cooled | Air-cooled |

| Price (USD) | $150,000–$200,000 | $250,000–$400,000 | ~$400,000 | $120,000–$180,000 | $75,000–$150,000 |

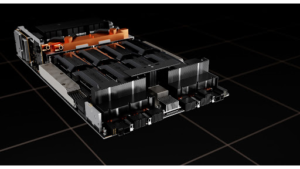

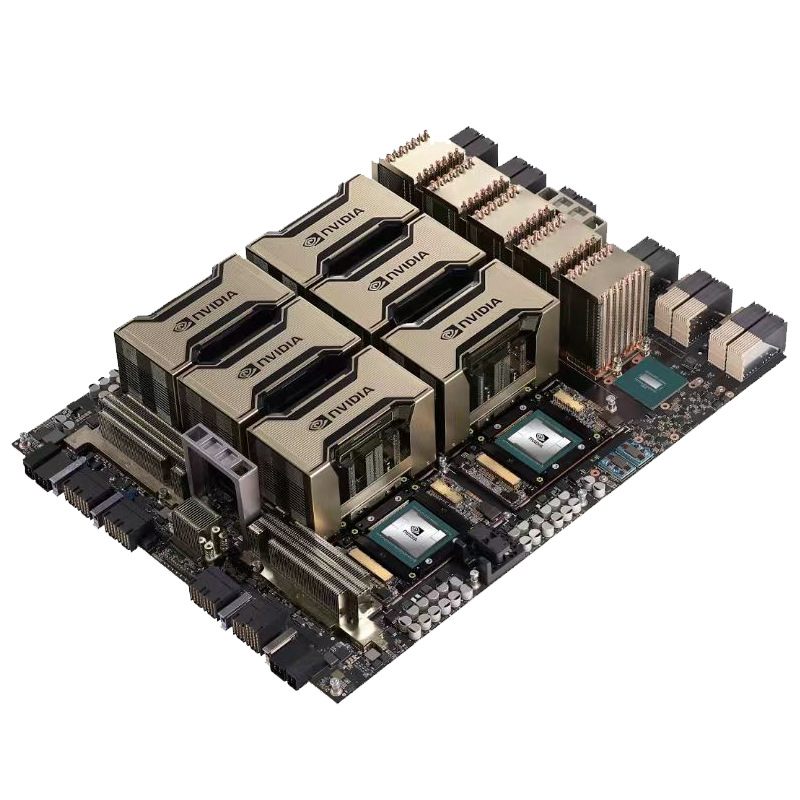

NVIDIA HGX A100

The NVIDIA HGX A100 is a high-performance computing (HPC) and AI platform built on NVIDIA’s Ampere architecture. It supports 4 or 8 A100 GPUs, offering up to 10 PFLOPS of FP16 performance. With HBM2 memory (40 GB or 80 GB per GPU) and NVSwitch/NVLink interconnects, it enables efficient multi-GPU communication for data-intensive workloads.

- Use Case: AI training, large-scale inference, HPC, and data analytics.

- Features:

- Flexible configurations for multi-tenancy.

- Support for mixed-precision calculations (FP64, FP32, FP16, and INT8).

- Cooling Options: Available in air-cooled and liquid-cooled configurations.

- Ideal For: Enterprises needing high scalability and performance for AI and HPC workloads.

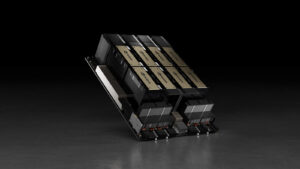

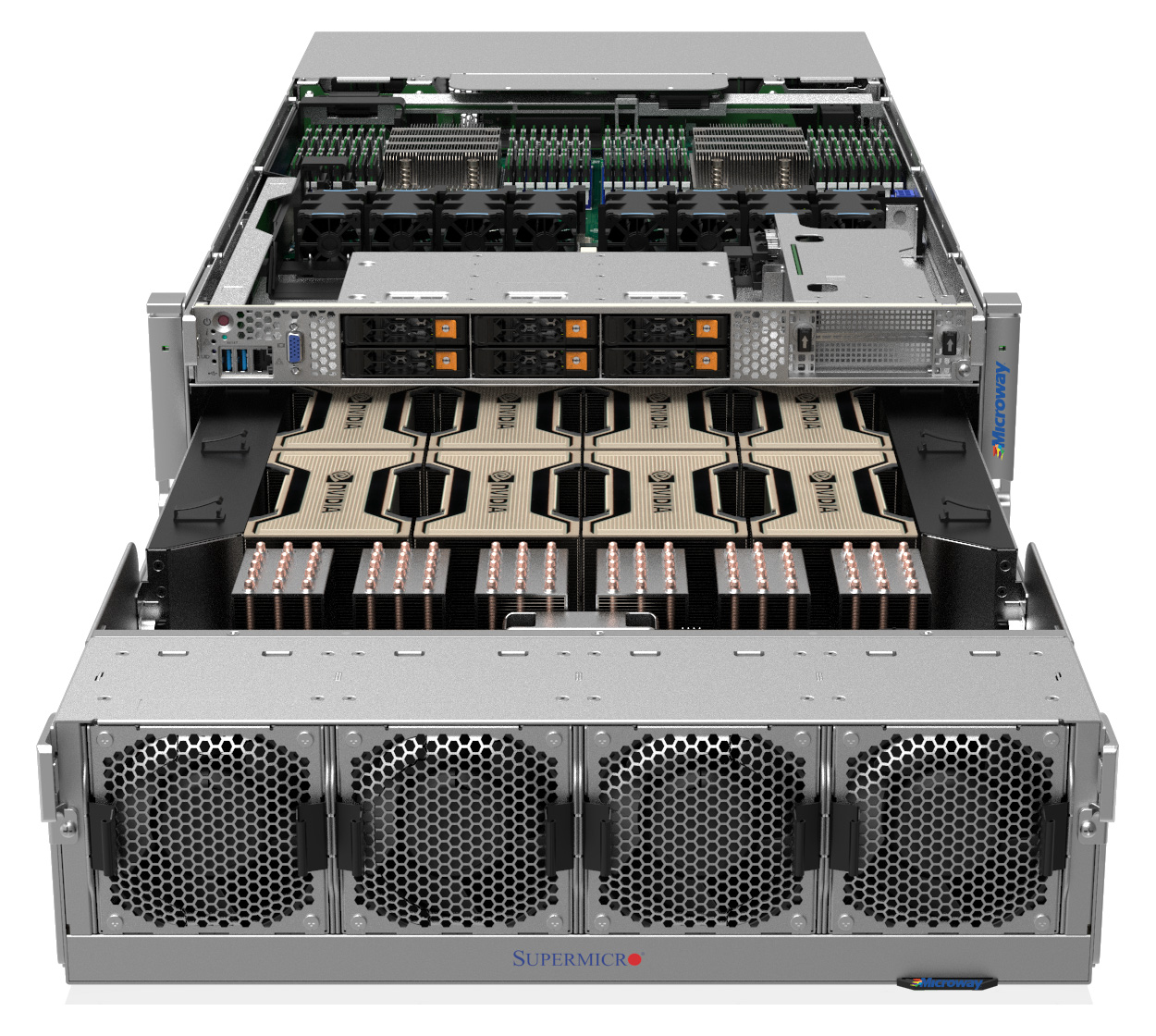

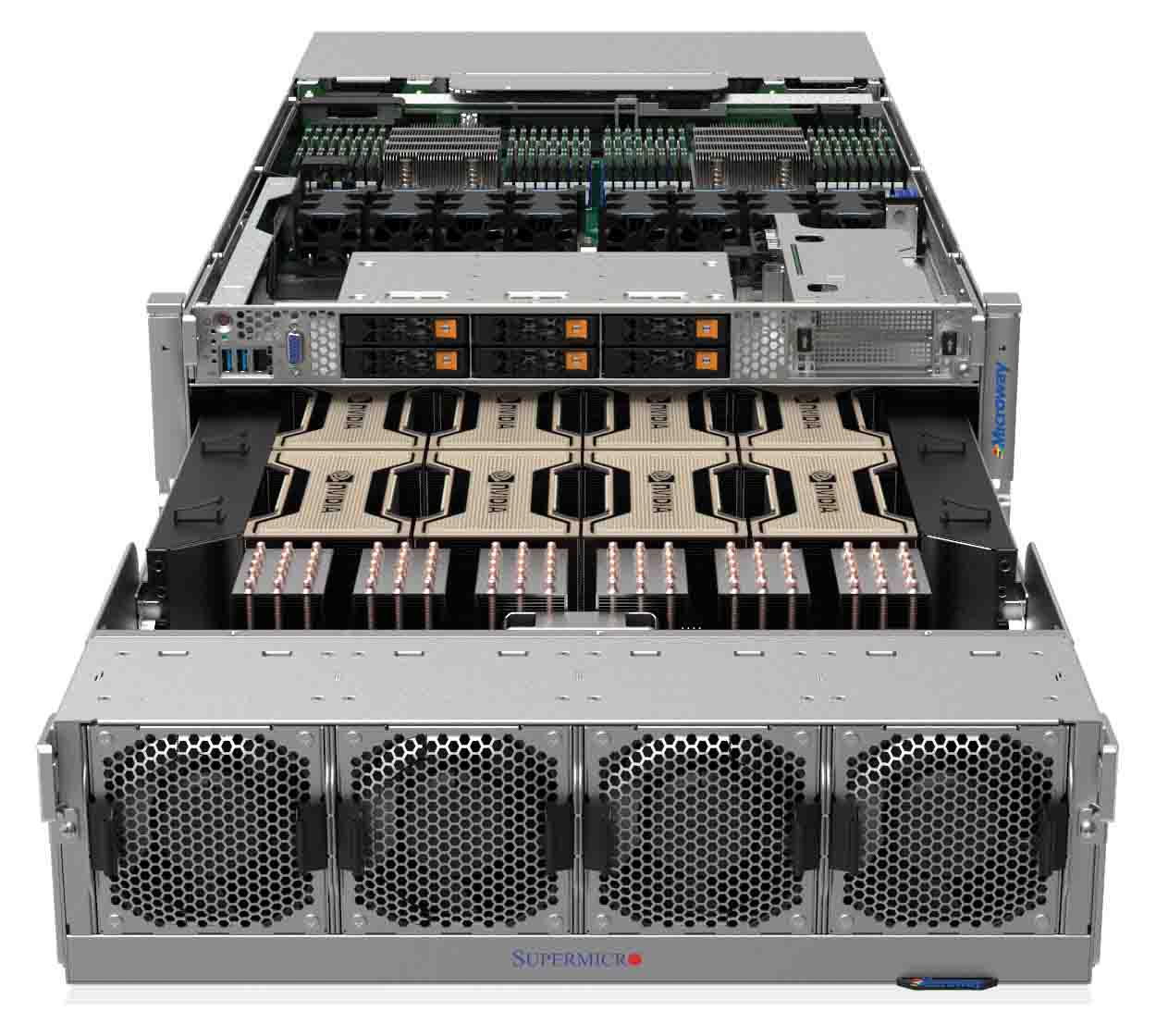

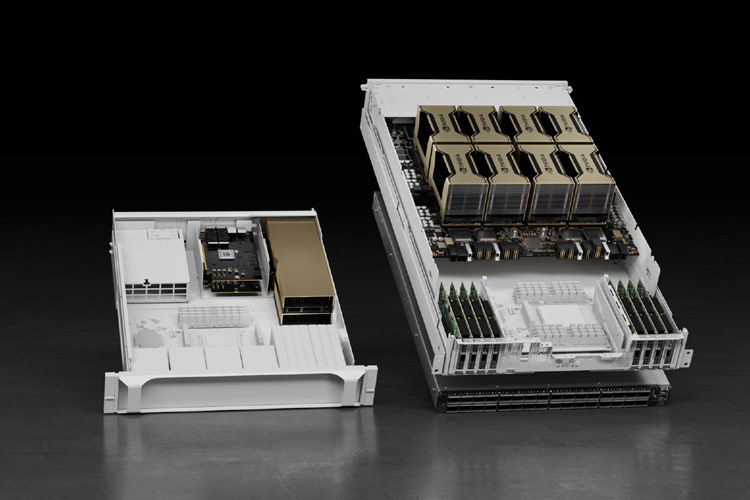

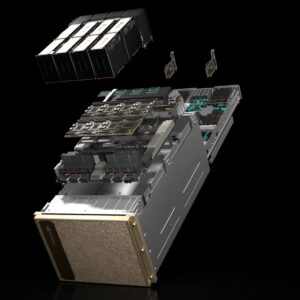

NVIDIA HGX H100

The NVIDIA HGX H100 is the latest and most powerful platform in the HGX series, featuring the Hopper architecture. It uses 4 or 8 H100 GPUs with 80 GB HBM3 memory per GPU, delivering up to 32 PFLOPS of FP8 performance. Enhanced NVSwitch and PCIe Gen5 support ensure unparalleled interconnect bandwidth.

- Use Case: Generative AI, exascale HPC, large-scale AI training, and transformer-based models.

- Features:

- Industry-leading memory bandwidth with HBM3.

- Advanced precision modes like FP8 for cutting-edge AI applications.

- Cooling: Liquid-cooled for optimal thermal management.

- Ideal For: Organizations developing generative AI models or conducting large-scale scientific simulations.

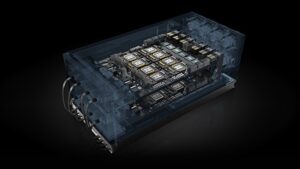

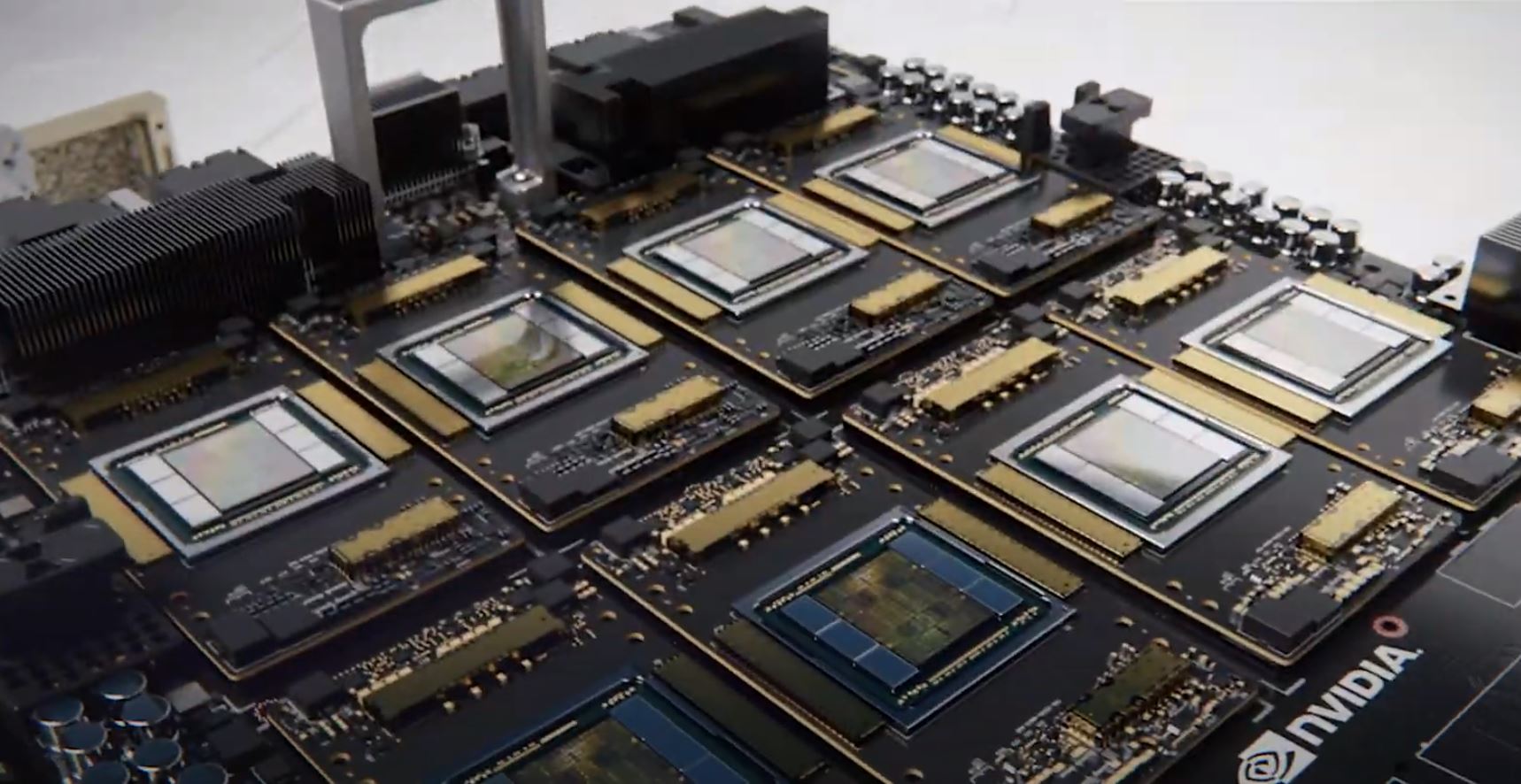

NVIDIA HGX-2

The NVIDIA HGX-2 is a legacy powerhouse featuring 16 NVIDIA Volta GPUs. It was one of the first platforms to introduce NVSwitch, enabling seamless communication across all GPUs. With 2 PFLOPS of FP16 performance and 512 GB of HBM2 memory, it supported large-scale AI training and HPC applications during its peak.

- Use Case: Legacy AI training and HPC workloads.

- Features:

- Pioneering NVSwitch technology for GPU-to-GPU interconnect.

- Cooling: Air-cooled for efficient deployment in data centers.

- Ideal For: Enterprises with established HPC and AI systems requiring Volta-based performance.

NVIDIA HGX A800

The NVIDIA HGX A800 is a variant of the HGX A100 tailored for the Chinese market to comply with export restrictions. It features 4 or 8 A800 GPUs (Ampere-based), each with 40 GB or 80 GB of HBM2 memory, offering performance slightly below the A100 due to capped FP32 and FP16 throughput.

- Use Case: AI training and inference in regions with restricted hardware access.

- Features:

- Similar architecture to HGX A100 but performance-optimized for specific regulations.

- Cooling Options: Air-cooled or liquid-cooled.

- Ideal For: Enterprises in China requiring advanced AI capabilities under compliance.

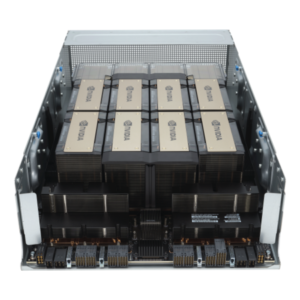

NVIDIA HGX V100

The NVIDIA HGX V100 is an earlier-generation HPC and AI platform built on NVIDIA’s Volta architecture. It supports 4 or 8 V100 GPUs, delivering up to 125 TFLOPS of FP16 performance. Designed for scalability and flexibility, it utilizes NVLink to enable high-speed GPU communication.

- Use Case: AI research, HPC simulations, and legacy AI workloads.

- Features:

- Proven reliability for diverse AI and HPC use cases.

- Cooling: Air-cooled for efficient deployment in enterprise data centers.

- Ideal For: Organizations with existing AI and HPC infrastructure needing Volta-based GPUs.

Reviews

There are no reviews yet.