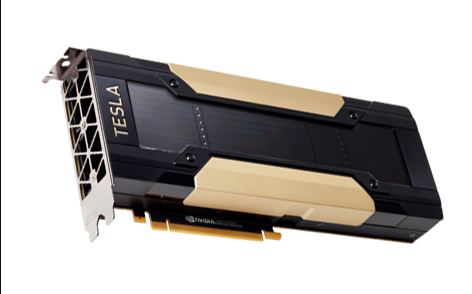

Brand new NVIDIA Tesla V100 32 GB Data Center GPU (PCIe and SXM2 variants)

Here’s a detailed comparison table for NVIDIA Tesla V100 32GB, NVIDIA Tesla V100 16GB, NVIDIA Tesla V100 PCIe, and NVIDIA Tesla V100 SXM2:

| Feature | NVIDIA Tesla V100 32GB | NVIDIA Tesla V100 16GB | NVIDIA Tesla V100 PCIe | NVIDIA Tesla V100 SXM2 |
|---|---|---|---|---|
| Architecture | NVIDIA Volta | NVIDIA Volta | NVIDIA Volta | NVIDIA Volta |
| Memory Capacity | 32GB HBM2 | 16GB HBM2 | 16GB or 32GB HBM2 | 16GB or 32GB HBM2 |
| Memory Bandwidth | 900 GB/s | 900 GB/s | 900 GB/s | 900 GB/s |
| CUDA Cores | 5,120 | 5,120 | 5,120 | 5,120 |
| Tensor Cores | 640 | 640 | 640 | 640 |
| Interface | SXM2 | SXM2 | PCIe Gen3 | SXM2 |
| Form Factor | SXM2 module | SXM2 module | PCIe (dual-slot) | SXM2 module |
| Power Consumption | 300W | 300W | 250W | 300W |
| Peak FP32 Performance | 15.7 TFLOPS | 15.7 TFLOPS | 14 TFLOPS | 15.7 TFLOPS |
| Peak FP64 Performance | 7.8 TFLOPS | 7.8 TFLOPS | 7 TFLOPS | 7.8 TFLOPS |
| Peak Tensor Performance (FP16 mixed precision) | 125 TFLOPS | 125 TFLOPS | 112 TFLOPS | 125 TFLOPS |
| Target Workload | AI/ML, HPC, Data Analytics | AI/ML, HPC, Data Analytics | AI/ML, HPC, Data Analytics | AI/ML, HPC, Data Analytics |
| Key Features | NVLink support for fast GPU interconnects | NVLink support for fast GPU interconnects | PCIe standard connectivity | High bandwidth via NVLink |
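
The SXM2 peak figures in the table (15.7, 7.8, and 125 TFLOPS) can be roughly reproduced from the core counts. The sketch below is a back-of-the-envelope check, not an official formula. It assumes a 1530 MHz boost clock for the SXM2 module, one fused multiply-add (2 FLOPs) per CUDA core per clock, FP64 at half the FP32 rate, and 64 fused multiply-adds per Tensor Core per clock. None of these values are stated on this page, and the function names are illustrative.

```python
# Rough peak-throughput arithmetic for the Tesla V100 SXM2.
# Assumptions (not listed on this page): 1530 MHz boost clock,
# 1 FMA per CUDA core per clock, 64 FMAs per Tensor Core per clock.

CUDA_CORES = 5120
TENSOR_CORES = 640
FLOPS_PER_FMA = 2                      # one multiply plus one add
FMA_PER_TENSOR_CORE_PER_CLOCK = 64     # 4x4x4 matrix multiply-accumulate
SXM2_BOOST_CLOCK_HZ = 1.530e9          # assumed boost clock


def peak_fp32_tflops(clock_hz=SXM2_BOOST_CLOCK_HZ):
    """Peak single-precision throughput in TFLOPS."""
    return CUDA_CORES * FLOPS_PER_FMA * clock_hz / 1e12


def peak_fp64_tflops(clock_hz=SXM2_BOOST_CLOCK_HZ):
    """Peak double-precision throughput, assumed to be half the FP32 rate."""
    return peak_fp32_tflops(clock_hz) / 2


def peak_tensor_tflops(clock_hz=SXM2_BOOST_CLOCK_HZ):
    """Peak mixed-precision Tensor Core throughput in TFLOPS."""
    fma_per_clock = TENSOR_CORES * FMA_PER_TENSOR_CORE_PER_CLOCK
    return fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


if __name__ == "__main__":
    print(f"FP32:   {peak_fp32_tflops():.1f} TFLOPS")
    print(f"FP64:   {peak_fp64_tflops():.1f} TFLOPS")
    print(f"Tensor: {peak_tensor_tflops():.1f} TFLOPS")
```

Passing a lower clock to the same functions gives correspondingly lower peaks, which is how a lower-power card with the same core counts ends up with lower rated throughput.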

NVIDIA Tesla V100 32GB

The NVIDIA Tesla V100 32GB, built on the NVIDIA Volta architecture, is a high-performance data center GPU designed to accelerate AI, machine learning, deep learning, and HPC workloads. Featuring 5,120 CUDA cores and 640 Tensor Cores, it delivers up to 15.7 TFLOPS of FP32 performance and up to 125 TFLOPS of Tensor Core performance for mixed-precision (FP16) tasks in the SXM2 form factor. The 32GB of HBM2 memory provides 900 GB/s of bandwidth, making it well suited to memory-intensive applications such as large-scale neural network training and simulations. With NVLink, it offers fast GPU-to-GPU communication, allowing demanding workloads to scale across multiple GPUs in data centers and research environments.

NVIDIA Tesla V100 16GB

The NVIDIA Tesla V100 16GB delivers the same compute performance as its 32GB counterpart but with half the memory capacity. With 16GB of HBM2 memory and 900 GB/s of bandwidth, it is suited to AI/ML training, data analytics, and HPC tasks whose working sets fit in 16GB. Equipped with 5,120 CUDA cores and 640 Tensor Cores, it supports up to 125 TFLOPS of mixed-precision (FP16) Tensor Core performance. It is a cost-effective option for enterprises and researchers who want high-performance GPU acceleration without paying for the larger memory capacity.
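
As a rough way to judge whether a training job needs the 32GB card, the sketch below estimates the memory taken by model state alone. It is an approximation under stated assumptions, not a sizing guarantee: it assumes mixed-precision training with the Adam optimizer at about 16 bytes per parameter, treats the card capacity as GiB, and reserves an arbitrary 30% of memory for activations and workspace. The function names and the headroom value are hypothetical.

```python
# Rough estimate of training-state memory versus V100 capacity.
# Assumption: mixed-precision Adam training stores, per parameter,
# FP16 weights (2 B) + FP16 gradients (2 B) + FP32 master weights (4 B)
# + two FP32 Adam moments (4 B + 4 B) = 16 bytes.

GIB = 1024 ** 3
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def training_state_gib(num_params):
    """Memory for weights, gradients, and optimizer state, in GiB."""
    return num_params * BYTES_PER_PARAM / GIB


def fits_on_card(num_params, card_gib, headroom=0.3):
    """True if model state fits while leaving `headroom` (an assumed
    fraction of the card) free for activations and workspace."""
    return training_state_gib(num_params) <= card_gib * (1 - headroom)


if __name__ == "__main__":
    for params in (350_000_000, 1_000_000_000):
        print(
            f"{params:>13,} params: "
            f"{training_state_gib(params):5.1f} GiB of state, "
            f"16GB card: {fits_on_card(params, 16)}, "
            f"32GB card: {fits_on_card(params, 32)}"
        )
```

Under these assumptions a model with about one billion parameters exceeds the budget on the 16GB card but fits on the 32GB card. Real usage also depends on batch size, sequence length, and framework overhead.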

NVIDIA Tesla V100 PCIe

The NVIDIA Tesla V100 PCIe is a dual-slot PCIe card designed to bring the Volta architecture to traditional server environments. Available in 16GB or 32GB HBM2 configurations, it offers the same 900 GB/s memory bandwidth as the SXM2 version at a lower power consumption of 250W, with slightly lower peak compute as a result: up to 14 TFLOPS of FP32, 7 TFLOPS of FP64, and 112 TFLOPS of mixed-precision (FP16) Tensor Core performance. With 5,120 CUDA cores and 640 Tensor Cores, it accelerates AI/ML, HPC, and data science workflows. Its PCIe Gen3 interface is compatible with a wide range of servers, making it a flexible choice for organizations looking to add GPU computing to existing infrastructure.

NVIDIA Tesla V100 SXM2

The NVIDIA Tesla V100 SXM2 is engineered for high-density GPU server environments and NVIDIA DGX systems. Featuring 5,120 CUDA cores and 640 Tensor Cores, it delivers up to 15.7 TFLOPS of FP32 performance, 7.8 TFLOPS of FP64 performance, and 125 TFLOPS of mixed-precision (FP16) Tensor Core performance. The SXM2 form factor supports a 300W power budget and server-integrated cooling, while NVLink provides high-speed interconnects between GPUs, enabling multi-GPU scaling with up to 300 GB/s of total bandwidth per GPU across its six links. Available in both 16GB and 32GB HBM2 configurations, the Tesla V100 SXM2 is suited to AI training, deep learning inference, and large-scale HPC simulations.
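
The 300 GB/s NVLink figure is best read as an aggregate for the whole GPU rather than a single link. The short sketch below shows the arithmetic, assuming six NVLink links per V100 at 25 GB/s in each direction per link. These per-link values are not given on this page, and the names are illustrative.

```python
# NVLink bandwidth arithmetic for a Tesla V100 SXM2.
# Assumptions (not listed on this page): 6 links per GPU,
# 25 GB/s per direction on each link.

NVLINK_LINKS = 6
GBPS_PER_DIRECTION_PER_LINK = 25


def per_link_bidirectional_gbps(per_direction=GBPS_PER_DIRECTION_PER_LINK):
    """Bandwidth of one link counting both directions."""
    return per_direction * 2


def total_nvlink_gbps(links=NVLINK_LINKS,
                      per_direction=GBPS_PER_DIRECTION_PER_LINK):
    """Aggregate bidirectional bandwidth across all links on one GPU."""
    return links * per_link_bidirectional_gbps(per_direction)


if __name__ == "__main__":
    print(f"Per link: {per_link_bidirectional_gbps()} GB/s")
    print(f"Per GPU:  {total_nvlink_gbps()} GB/s")
```

How much of that aggregate is available between any two GPUs depends on how many links the server topology wires between them.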
