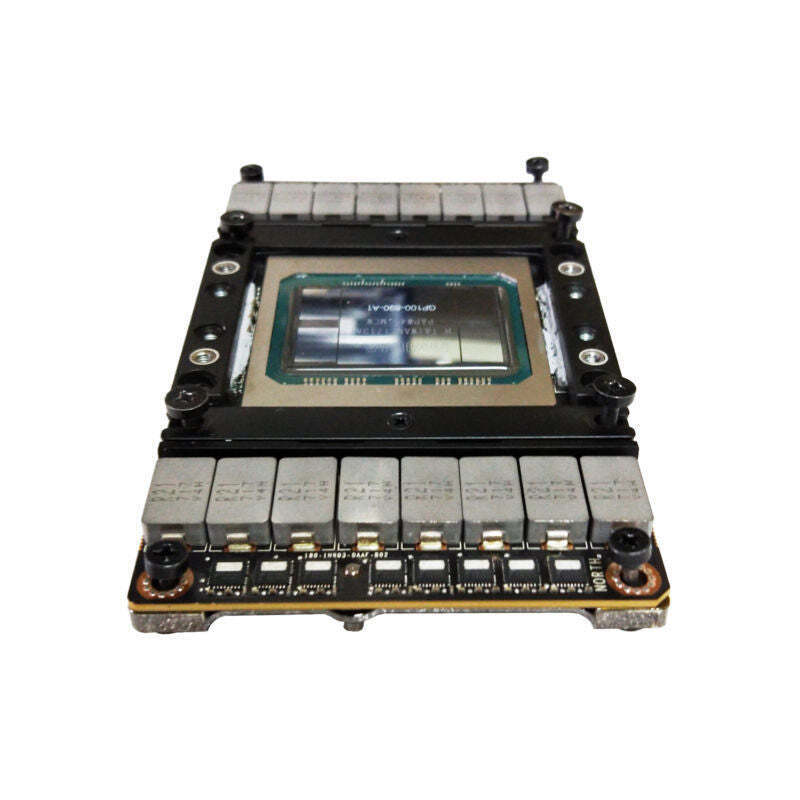

Wholesale Brand-New NVIDIA Tesla P100 16GB/12GB PCIe, P100 SXM2, P40, and P4 GPU Accelerator Cards for AI and HPC

Here’s a detailed comparison table for Tesla P100 16GB PCIe, Tesla P100 12GB PCIe, Tesla P100 SXM2, Tesla P40, and Tesla P4:

| Feature | Tesla P100 16GB PCIe | Tesla P100 12GB PCIe | Tesla P100 SXM2 | Tesla P40 | Tesla P4 |
|---|---|---|---|---|---|
| Architecture | NVIDIA Pascal | NVIDIA Pascal | NVIDIA Pascal | NVIDIA Pascal | NVIDIA Pascal |
| Memory Capacity | 16GB HBM2 | 12GB HBM2 | 16GB HBM2 | 24GB GDDR5 | 8GB GDDR5 |
| Memory Bandwidth | 732 GB/s | 549 GB/s | 732 GB/s | 346 GB/s | 192 GB/s |
| CUDA Cores | 3,584 | 3,584 | 3,584 | 3,840 | 2,560 |
| Tensor Cores | N/A | N/A | N/A | N/A | N/A |
| Interface | PCIe Gen3 | PCIe Gen3 | SXM2 | PCIe Gen3 | PCIe Gen3 |
| Form Factor | Dual-slot | Dual-slot | SXM2 | Dual-slot | Single-slot |
| Power Consumption | 250W | 250W | 300W | 250W | 50W |
| Peak FP32 Performance | 9.3 TFLOPS | 9.3 TFLOPS | 10.6 TFLOPS | 12 TFLOPS | 5.5 TFLOPS |
| Peak FP64 Performance | 4.7 TFLOPS | 4.7 TFLOPS | 5.3 TFLOPS | 0.37 TFLOPS | 0.17 TFLOPS |
| Target Workload | HPC, AI/ML, data analytics | HPC, AI/ML, data analytics | HPC, AI/ML, data analytics | Deep learning inference | Deep learning inference |
| Key Features | High memory capacity, ECC support | Balanced performance with reduced memory | High-performance NVLink support | Optimized for deep learning inference | Energy-efficient AI/ML inference |
| Use Case | High-performance computing, large datasets | HPC with moderate memory requirements | HPC with ultra-fast inter-GPU communication | AI/ML inference for large models | Edge AI/ML inference, low-power environments |
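
To narrow the choice for a given server, the memory, bandwidth, and power rows above can be turned into a small filter. The following is only an illustrative sketch: the `Card` class and the `shortlist` helper are hypothetical names introduced here, and the figures are copied from the table rather than measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    memory_gb: int       # Memory Capacity row
    bandwidth_gbs: int   # Memory Bandwidth row, in GB/s
    power_w: int         # Power Consumption row, in watts


# Values copied from the comparison table (assumed accurate as listed).
CARDS = [
    Card("Tesla P100 16GB PCIe", 16, 732, 250),
    Card("Tesla P100 12GB PCIe", 12, 549, 250),
    Card("Tesla P100 SXM2", 16, 732, 300),
    Card("Tesla P40", 24, 346, 250),
    Card("Tesla P4", 8, 192, 50),
]


def shortlist(min_memory_gb: int, max_power_w: int) -> list[Card]:
    """Return cards meeting both limits, highest memory bandwidth first."""
    fits = [
        c for c in CARDS
        if c.memory_gb >= min_memory_gb and c.power_w <= max_power_w
    ]
    return sorted(fits, key=lambda c: c.bandwidth_gbs, reverse=True)


if __name__ == "__main__":
    # Example: at least 16 GB of memory within a 250 W slot budget.
    for card in shortlist(min_memory_gb=16, max_power_w=250):
        print(card.name, card.memory_gb, "GB,", card.bandwidth_gbs, "GB/s")
```

Sorting by bandwidth is one possible tie-breaker. For inference-heavy deployments, sorting by memory capacity or power draw may be a better fit. The filter also ignores the interface row, so an SXM2 card can appear in the results even though it needs an SXM2-capable server rather than a PCIe slot.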
