Wholesale Radeon Instinct MI25 16GB, MI50, MI60 32GB, MI100, MI200 128GB PCIe GPU graphics cards

Here’s a detailed comparison table for AMD’s Radeon Instinct series GPUs: MI25, MI50, MI60, MI100, and MI200.

| Specification | Radeon Instinct MI25 | Radeon Instinct MI50 | Radeon Instinct MI60 | Radeon Instinct MI100 | Radeon Instinct MI200 |
|---|---|---|---|---|---|
| Architecture | Vega 10 | Vega 20 | Vega 20 | CDNA | CDNA 2 |
| GPU Memory | 16 GB HBM2 | 16 GB HBM2 | 32 GB HBM2 | 32 GB HBM2 | Up to 128 GB HBM2e |
| Memory Bandwidth | 484 GB/s | 1,024 GB/s | 1,024 GB/s | 1,228 GB/s | Up to 3,276 GB/s |
| Peak Compute Performance | FP32: 12.3 TFLOPS; FP16: 24.6 TFLOPS | FP64: 6.7 TFLOPS; FP32: 13.4 TFLOPS; FP16: 26.8 TFLOPS | FP64: 7.4 TFLOPS; FP32: 14.7 TFLOPS; FP16: 29.5 TFLOPS | FP64: 11.5 TFLOPS; FP32: 23.1 TFLOPS; FP16: 184.6 TFLOPS (matrix); BF16: 92.3 TFLOPS (matrix) | FP64: up to 95.7 TFLOPS (matrix); FP32: up to 95.7 TFLOPS (matrix/packed); FP16: up to 383 TFLOPS; BF16: up to 383 TFLOPS |
| TDP (Thermal Design Power) | 300 W | 300 W | 300 W | 300 W | Up to 500 W |
| Interface | PCIe 3.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 | OAM (Open Accelerator Module) or PCIe 4.0 x16 |
| Release Year | 2017 | 2018 | 2018 | 2020 | 2021 |
| Approximate Market Price | New: not widely available; Used: ~$399 | New: not widely available; Used: ~$163 | New: MSRP ~$13,465; Used: varies | New: not widely available; Used: varies | New: not widely available; Used: varies |
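To compare the cards on derived metrics rather than raw specs, the table’s figures can be dropped into a short script. This is an illustrative sketch: the `SPECS` dictionary and the two helpers (memory bandwidth per watt of TDP, and the time to read the full memory once at peak bandwidth) are hypothetical names introduced here, not vendor metrics, and the MI200 row uses the table’s “up to” maxima.

```python
# Spec values copied from the comparison table (MI200 = the table's "up to" maxima).
SPECS = {
    "MI25":  {"memory_gb": 16,  "bandwidth_gbs": 484,  "tdp_w": 300},
    "MI50":  {"memory_gb": 16,  "bandwidth_gbs": 1024, "tdp_w": 300},
    "MI60":  {"memory_gb": 32,  "bandwidth_gbs": 1024, "tdp_w": 300},
    "MI100": {"memory_gb": 32,  "bandwidth_gbs": 1228, "tdp_w": 300},
    "MI200": {"memory_gb": 128, "bandwidth_gbs": 3276, "tdp_w": 500},
}


def bandwidth_per_watt(spec: dict) -> float:
    """Peak memory bandwidth (GB/s) per watt of rated TDP."""
    return spec["bandwidth_gbs"] / spec["tdp_w"]


def full_sweep_ms(spec: dict) -> float:
    """Milliseconds needed to read the entire memory once at peak bandwidth."""
    return spec["memory_gb"] / spec["bandwidth_gbs"] * 1000.0


if __name__ == "__main__":
    # Rank cards from highest to lowest bandwidth per watt.
    ranked = sorted(SPECS.items(), key=lambda kv: bandwidth_per_watt(kv[1]), reverse=True)
    for name, spec in ranked:
        print(f"{name:6s} {bandwidth_per_watt(spec):5.2f} GB/s per W  "
              f"{full_sweep_ms(spec):5.1f} ms per full-memory sweep")
```

Both metrics rely on peak bandwidth and rated TDP, so they are upper-bound comparisons rather than measured efficiency.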

Descriptions for AMD Radeon Instinct Series GPUs

Radeon Instinct MI25

- Overview:

Released in 2017, the Radeon Instinct MI25 was the Vega 10-based flagship of AMD’s first Radeon Instinct lineup of deep-learning and HPC accelerators. It features 16GB of HBM2 memory and delivers up to 12.3 TFLOPS of FP32 compute performance. The MI25 targets AI training, inference, and general-purpose GPU computing.

- Key Features:

- 16GB HBM2 memory with 484GB/s bandwidth

- FP32 compute performance of 12.3 TFLOPS and FP16 of 24.6 TFLOPS

- TDP of 300W

- PCIe 3.0 x16 interface

- Use Cases:

AI inference, general HPC tasks, and machine learning model training in data centers.

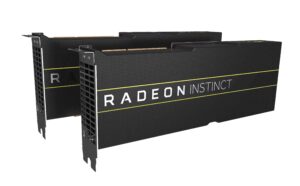

Radeon Instinct MI50

- Overview:

The Radeon Instinct MI50, introduced in 2018, is built on the 7nm Vega 20 architecture and roughly doubles the MI25’s memory bandwidth (1,024GB/s vs. 484GB/s) while adding far stronger FP64 throughput. It features 16GB of HBM2 memory, making it suitable for AI training and large-scale simulations.

- Key Features:

- 16GB HBM2 memory with 1,024GB/s bandwidth

- FP32 compute performance of 13.4 TFLOPS and FP16 of 26.8 TFLOPS

- Vega 20 architecture on a 7nm process, with FP64 at half the FP32 rate

- TDP of 300W and PCIe 4.0 x16 support

- Use Cases:

AI training, scientific simulations, and financial modeling tasks.

Radeon Instinct MI60

- Overview:

The Radeon Instinct MI60 is also based on the Vega 20 architecture but doubles the MI50’s memory capacity. With 32GB of HBM2 memory and slightly higher compute throughput than the MI50, the MI60 is designed for large-scale HPC and AI workloads that need double-precision (FP64) math.

- Key Features:

- 32GB HBM2 memory with 1,024GB/s bandwidth

- FP64 compute performance of 7.4 TFLOPS, FP32 of 14.7 TFLOPS, and FP16 of 29.5 TFLOPS

- High-performance computing for scientific simulations

- PCIe 4.0 x16 interface and 300W TDP

- Use Cases:

Large-scale AI training, high-precision scientific research, and weather modeling.
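The MI60’s three peak figures follow from one formula: stream processors × 2 FLOPs per clock (one fused multiply-add) × peak clock, with Vega 20 running FP16 at twice and FP64 at half the FP32 rate. The worked numbers below assume AMD’s published 4,096 stream processors and 1,800 MHz peak engine clock for the MI60, which this listing does not state; the results are theoretical peaks, not measured throughput.

```latex
\begin{aligned}
\text{FP32} &= 4096 \times 2\ \tfrac{\text{FLOP}}{\text{clock}} \times 1.8\ \text{GHz} \approx 14.75\ \text{TFLOPS} \\
\text{FP16} &= 2 \times \text{FP32} \approx 29.5\ \text{TFLOPS} \\
\text{FP64} &= \tfrac{1}{2} \times \text{FP32} \approx 7.4\ \text{TFLOPS}
\end{aligned}
```

The unrounded FP32 value of about 14.75 TFLOPS is why different sources quote it as either 14.7 or 14.8 TFLOPS.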

Radeon Instinct MI100

- Overview:

The MI100 is AMD’s first GPU based on the CDNA architecture, tailored for AI training and HPC workloads, and the first accelerator sold under the AMD Instinct name rather than Radeon Instinct. It pairs 32GB of HBM2 memory with a PCIe 4.0 x16 interface, raises memory bandwidth and compute throughput over the Vega 20 cards, and delivers up to 11.5 TFLOPS of FP64 compute performance.

- Key Features:

- 32GB HBM2 memory with 1,228GB/s bandwidth

- FP64 compute performance of 11.5 TFLOPS, FP16 of 184.6 TFLOPS, and BF16 of 92.3 TFLOPS (FP16 and BF16 are matrix-operation peaks)

- Optimized CDNA architecture for AI and HPC workloads

- PCIe 4.0 x16 support with a TDP of 300W

- Use Cases:

AI model training, high-precision simulations, and computational physics.
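The same peak-rate arithmetic explains why the MI100’s FP16 figure dwarfs its FP32 figure. The sketch below assumes AMD’s published 7,680 stream processors and 1,502 MHz peak engine clock for the MI100 (not stated in this listing). FP64 runs at half the FP32 vector rate, while the 184.6 TFLOPS FP16 figure is a Matrix Core rate, eight times the FP32 vector rate:

```latex
\begin{aligned}
\text{FP32} &= 7680 \times 2\ \tfrac{\text{FLOP}}{\text{clock}} \times 1.502\ \text{GHz} \approx 23.07\ \text{TFLOPS} \\
\text{FP64} &= \tfrac{1}{2} \times \text{FP32} \approx 11.5\ \text{TFLOPS} \\
\text{FP16 (matrix)} &= 8 \times \text{FP32} \approx 184.6\ \text{TFLOPS}
\end{aligned}
```

Workloads that cannot be expressed as matrix operations see the vector rates, not the matrix peak.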

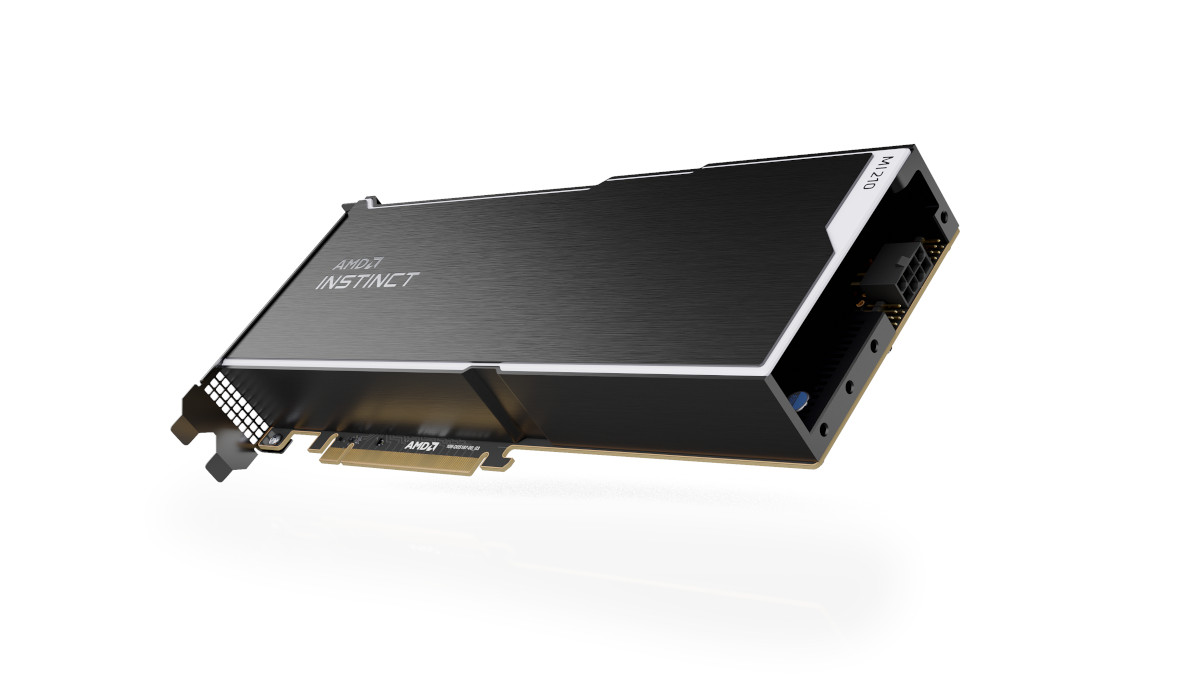

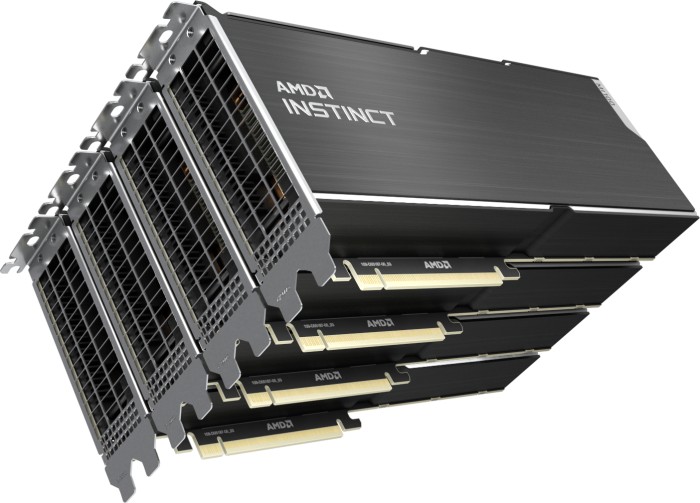

Radeon Instinct MI200 Series

- Overview:

The MI200 series, sold under the AMD Instinct name, is the most capable line in this comparison and is built on the CDNA 2 architecture. Available as the MI210, MI250, and MI250X, the series offers up to 128GB of HBM2e memory (on the MI250 and MI250X) and the highest compute throughput of the cards listed here, aimed at large-scale AI and HPC workloads.

- Key Features:

- Up to 128GB HBM2e memory with 3,276GB/s bandwidth

- FP64 compute performance of up to 95.7 TFLOPS (matrix) and FP16/BF16 of up to 383 TFLOPS

- OAM modules (MI250, MI250X) for data-center servers, or a PCIe 4.0 x16 card (MI210)

- TDP of up to 500W

- Use Cases:

Exascale computing, AI supercomputing, large-scale simulations, and cloud AI applications.
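For the large-scale AI use cases above, a common first check is whether a model’s weights fit in device memory. The sketch below is a rough estimate under stated assumptions: weights only (no activations, optimizer state, or caches), decimal gigabytes, and 4 bytes per parameter for FP32 or 2 for FP16/BF16; the helper names are hypothetical. On the MI250 and MI250X, the 128GB is split across two graphics compute dies with 64GB each, which software sees as two GPUs, so a model that must sit on a single device has 64GB to work with.

```python
# Bytes needed to store one parameter at each precision (assumption: dense weights).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}


def max_params(memory_gb: float, dtype: str) -> float:
    """Largest parameter count whose weights fit in memory_gb (decimal GB)."""
    return memory_gb * 1e9 / BYTES_PER_PARAM[dtype]


def weights_fit(num_params: float, memory_gb: float, dtype: str) -> bool:
    """True if the raw weights alone fit; ignores activations and optimizer state."""
    return num_params * BYTES_PER_PARAM[dtype] <= memory_gb * 1e9


if __name__ == "__main__":
    for gb in (16, 32, 64, 128):
        billions = max_params(gb, "fp16") / 1e9
        print(f"{gb:4d} GB -> up to {billions:.0f}B FP16 parameters (weights only)")
```

Real training jobs need several times the weight footprint for gradients and optimizer state, so treat these figures as a ceiling.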
