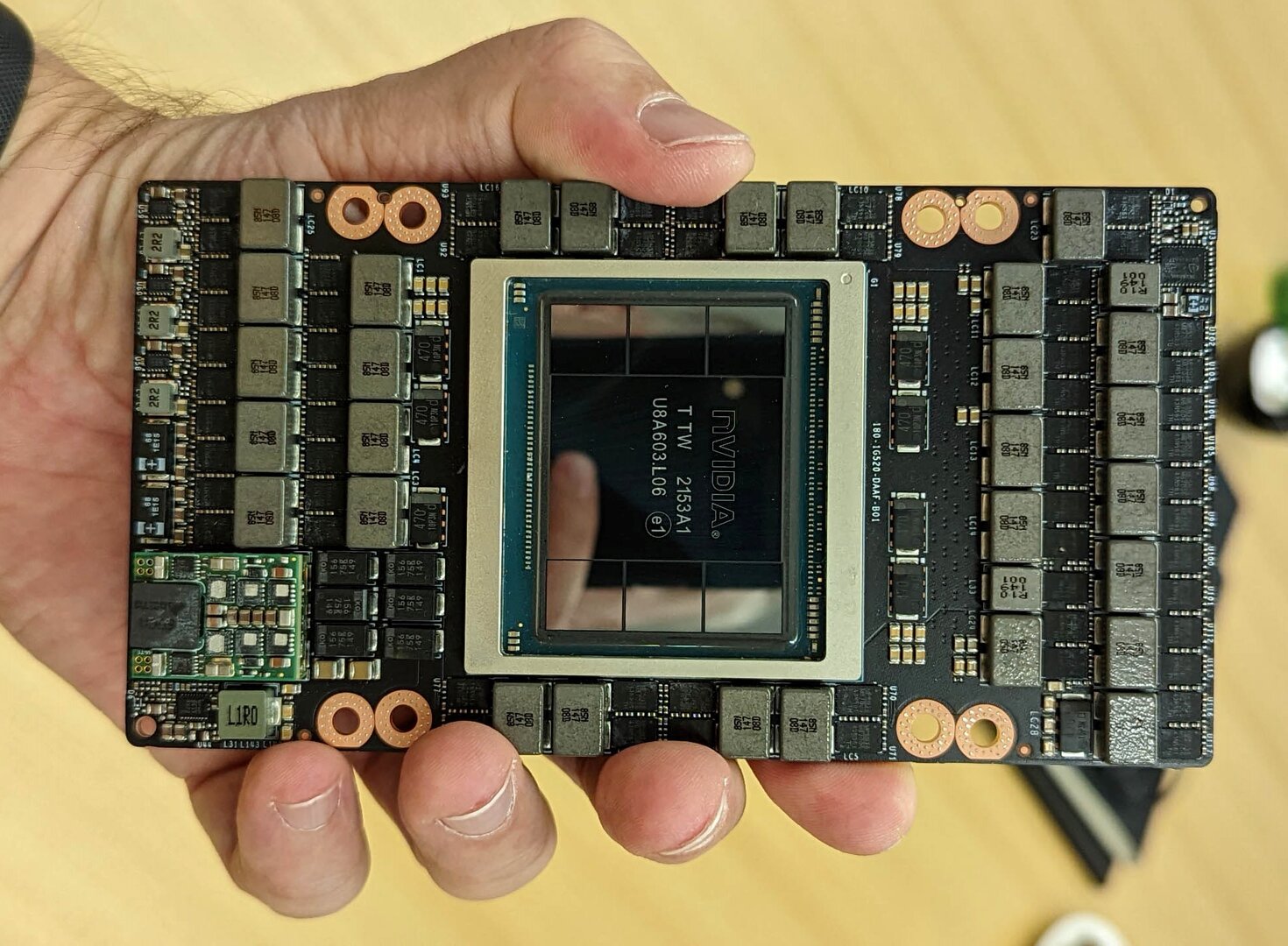

Brand new Nvidia H100 PCIE 80GB 48GB H100 SXM H100 CNX AND h100 NVL AI training and inference HPC GPU graphic card

Here’s a detailed comparison of NVIDIA’s H100 series GPUs, focusing on the PCIe 80GB, PCIe 48GB, SXM, CNX, and NVL models.

| Specification | H100 PCIe 80GB | H100 PCIe 48GB | H100 SXM | H100 CNX | H100 NVL |

|---|---|---|---|---|---|

| Form Factor | Dual-slot PCIe card | Dual-slot PCIe card | SXM Module | PCIe card with integrated ConnectX-7 NIC | Dual PCIe cards with NVLink bridge |

| Memory | 80GB HBM3 | 48GB HBM3 | 80GB HBM3 | 80GB HBM3 | 188GB HBM3 (94GB per card) |

| Memory Bandwidth | 2 TB/s | 1.5 TB/s | 3 TB/s | 2 TB/s | 7 TB/s (combined) |

| Compute Performance | – FP64: 67 TFLOPS – FP32: 67 TFLOPS – Tensor (FP16/TF32): ~2 PFLOPS |

– FP64: Slightly lower than 80GB version – FP32: Slightly lower – Tensor: Slightly lower |

– FP64: 67 TFLOPS – FP32: 67 TFLOPS – Tensor: ~3 PFLOPS |

Similar to H100 PCIe 80GB | – FP64: Combined performance – FP32: Combined performance – Tensor: ~4 PFLOPS (combined) |

| Power Consumption | 350W | 300W | Up to 700W | 350W | 700W (combined) |

| Interface | PCIe 5.0 | PCIe 5.0 | SXM5 | PCIe 5.0 with integrated networking | PCIe 5.0 with NVLink bridge |

| Target Applications | High-performance computing, AI training and inference | AI inference, medium-scale training | Large-scale AI training, HPC | AI workloads requiring high-speed networking | Large language model inference, massive AI workloads |

| Approximate Price | $30,970.79 | Not widely available; expected to be lower than 80GB version | Approximately $34,768 | Pricing varies; consult NVIDIA partners | Approximately $44,999 |

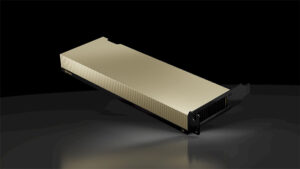

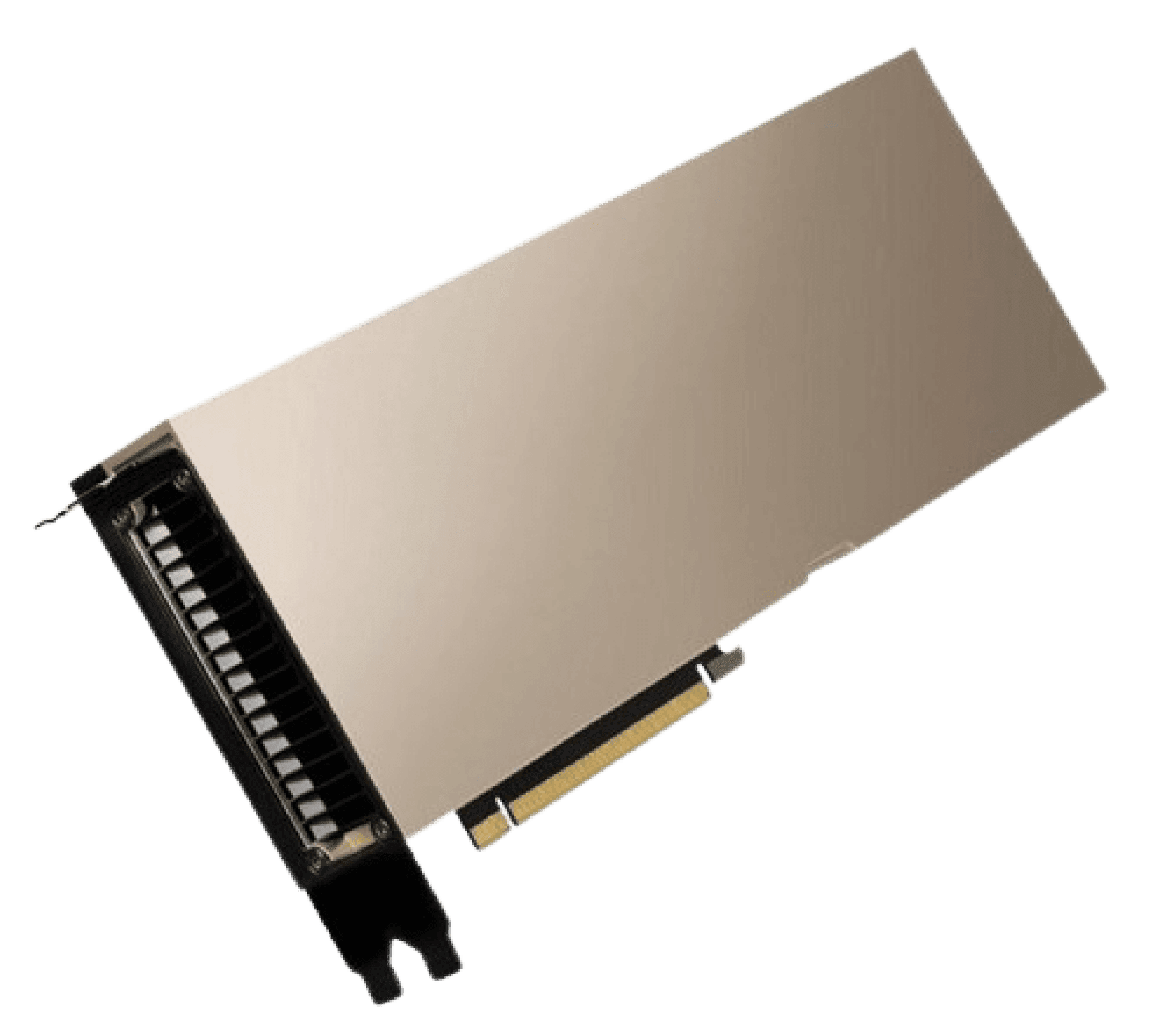

NVIDIA H100 PCIe 80GB

The H100 PCIe 80GB is a high-performance GPU designed for AI training, inference, and HPC workloads. Equipped with 80GB of HBM3 memory and a memory bandwidth of 2TB/s, it supports large-scale data processing and complex model training. The PCIe 5.0 interface ensures compatibility with a wide range of servers and workstations, making it an ideal choice for general-purpose high-performance computing. With a power consumption of 350W, it delivers 67 TFLOPS of FP64 performance and up to 2 PFLOPS of tensor core acceleration.

NVIDIA H100 PCIe 48GB

The H100 PCIe 48GB offers a cost-effective alternative to its 80GB counterpart, designed for medium-scale AI and inference tasks. It features 48GB of HBM3 memory with a memory bandwidth of 1.5TB/s, balancing performance and power efficiency. Its PCIe 5.0 interface ensures compatibility with standard server infrastructures. Consuming just 300W of power, it delivers reliable performance for AI inference, lightweight training workloads, and smaller-scale HPC applications.

NVIDIA H100 SXM

The H100 SXM is the flagship model in the H100 series, built for large-scale AI training and HPC tasks. It boasts 80GB of HBM3 memory with an unmatched 3TB/s memory bandwidth and peak tensor performance of 3 PFLOPS. The SXM form factor allows it to leverage up to 700W of power, maximizing compute efficiency. Designed for deployment in data centers with SXM-compatible servers, it is tailored for extreme workloads like 8K video processing, large language models, and real-time analytics.

NVIDIA H100 CNX

The H100 CNX combines the power of the H100 GPU with NVIDIA’s ConnectX-7 networking technology. This unique integration enables high-speed networking alongside 80GB of HBM3 memory with a bandwidth of 2TB/s. The PCIe 5.0 form factor ensures easy deployment in data centers requiring efficient communication between distributed systems. With 350W power consumption, the H100 CNX is ideal for workloads like distributed training, high-performance networking, and AI inference requiring fast data exchange.

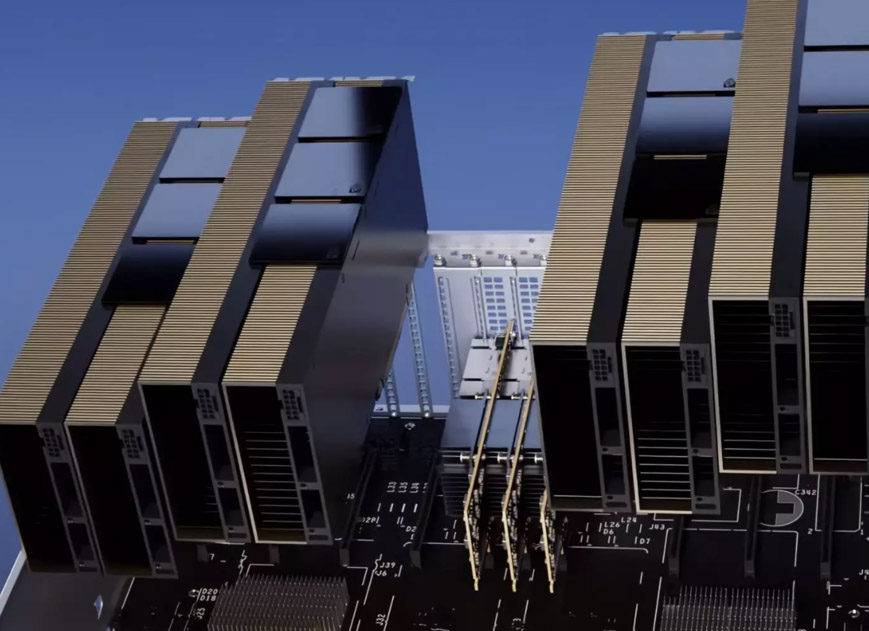

NVIDIA H100 NVL

The H100 NVL is designed for massive AI workloads and large language model inference. Featuring a dual-GPU configuration linked via NVLink, it offers a combined memory capacity of 188GB HBM3 and an extraordinary 7TB/s memory bandwidth. It provides up to 4 PFLOPS of tensor performance, making it ideal for handling enormous datasets and highly complex models. With a total power consumption of 700W, the H100 NVL excels in delivering cutting-edge performance for next-gen AI applications.

Reviews

There are no reviews yet.