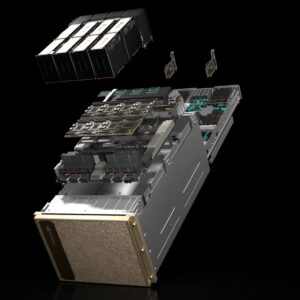

Wholesale, 100% brand-new, high-performance NVIDIA DGX-1 / DGX Station / DGX-2 / DGX A100 / DGX Station A100 / DGX H100 servers for AI training, inference, and high-performance computing

Here’s a detailed comparison of the NVIDIA DGX series models:

| Feature | DGX-1 | DGX Station | DGX-2 | DGX A100 | DGX Station A100 | DGX H100 |
|---|---|---|---|---|---|---|
| Release Year | 2016 | 2017 | 2018 | 2020 | 2020 | 2022 |
| GPU Architecture | Pascal/Volta | Volta | Volta | Ampere (A100) | Ampere (A100) | Hopper (H100) |
| Number of GPUs | 8 | 4 | 16 | 8 | 4 | 8 |
| GPU Memory | 16 GB per GPU | 16 GB per GPU | 32 GB per GPU | 40 GB/80 GB per GPU | 40 GB/80 GB per GPU | 80 GB per GPU |
| GPU Memory Type | HBM2 | HBM2 | HBM2 | HBM2/HBM2e | HBM2/HBM2e | HBM3 |
| Total GPU Memory | 128 GB | 64 GB | 512 GB | 320 GB/640 GB | 160 GB/320 GB | 640 GB |
| System Memory | 512 GB DDR4 | 256 GB DDR4 | 1.5 TB DDR4 | 1 TB/2 TB DDR4 | 512 GB DDR4 | 2 TB DDR5 |
| Peak FP16 Tensor Performance | 960 TFLOPS (Volta) | 480 TFLOPS | 2 PFLOPS | 5 PFLOPS | 2.5 PFLOPS | ~16 PFLOPS |
| FP8 Support | Not supported | Not supported | Not supported | Not supported | Not supported | Supported (32 PFLOPS) |
| Storage | 4 x 1.92 TB SSD (RAID 0) | 4 x 1.92 TB SSD (3 data in RAID 0, 1 OS) | 30 TB NVMe | 15 TB/30 TB NVMe | 7.68 TB NVMe data + 1.92 TB NVMe OS | 30 TB NVMe |
| Networking | 4 x 100 Gb/s EDR InfiniBand + 2 x 10 GbE | 2 x 10 GbE | 8 x 100 Gb/s (EDR InfiniBand or 100 GbE) | 8 x 200 Gb/s HDR InfiniBand | 2 x 10 GbE | 8 x 400 Gb/s (InfiniBand or Ethernet) |
| Max Power Consumption | 3.5 kW | 1.5 kW | 10 kW | 6.5 kW | 1.5 kW | ~10.2 kW |
| Cooling System | Air-cooled | Water-cooled | Air-cooled | Air-cooled | Refrigerant (liquid) cooled | Air-cooled |
| Chassis Type | 3U Rackmount | Desktop Tower | 10U Rackmount | 6U Rackmount | Desktop Tower | 8U Rackmount |
| Weight | ~60 kg | ~40 kg | ~163 kg | ~123 kg | ~43 kg | ~130 kg |
| Operating System | DGX OS (Ubuntu-based) | DGX OS (Ubuntu-based) | DGX OS (Ubuntu-based) | DGX OS (Ubuntu-based) | DGX OS (Ubuntu-based) | DGX OS (Ubuntu-based) |
| Software Stack | NVIDIA CUDA, cuDNN, NCCL | NVIDIA CUDA, cuDNN, NCCL | NVIDIA CUDA, cuDNN, NCCL | NVIDIA CUDA, cuDNN, NCCL | NVIDIA CUDA, cuDNN, NCCL | NVIDIA CUDA, cuDNN, NCCL |
| Software Catalog | NVIDIA GPU Cloud (NGC) containers | NVIDIA GPU Cloud (NGC) containers | NVIDIA GPU Cloud (NGC) containers | NVIDIA GPU Cloud (NGC) containers | NVIDIA GPU Cloud (NGC) containers | NVIDIA GPU Cloud (NGC) containers |
| Price (USD) | $129,000 | $49,999 | $399,000 | $199,000 | $99,000–$149,000 | ~$379,000 |

Ampere and Hopper performance figures are NVIDIA’s peak Tensor Core numbers with structured sparsity; the DGX-1 figure is for the Volta (V100) configuration.
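The Total GPU Memory row is simply the GPU count multiplied by the per-GPU capacity, which makes it easy to sanity-check. The short Python sketch recomputes it; the `SYSTEMS` mapping and the `total_gpu_memory_gb` helper are illustrative names, and the capacities are the per-GPU figures from the GPU Memory row.

```python
# Sketch: recompute the "Total GPU Memory" row as GPU count x per-GPU capacity.
# The SYSTEMS mapping is illustrative; capacities mirror the table's GPU Memory row.
SYSTEMS = {
    # name: (number of GPUs, available per-GPU memory options in GB)
    "DGX-1": (8, [16]),
    "DGX Station": (4, [16]),
    "DGX-2": (16, [32]),
    "DGX A100": (8, [40, 80]),
    "DGX Station A100": (4, [40, 80]),
    "DGX H100": (8, [80]),
}


def total_gpu_memory_gb(num_gpus: int, per_gpu_gb: int) -> int:
    """Aggregate GPU memory (GB) for a system built from identical GPUs."""
    return num_gpus * per_gpu_gb


if __name__ == "__main__":
    for name, (count, options) in SYSTEMS.items():
        totals = " / ".join(f"{total_gpu_memory_gb(count, gb):,} GB" for gb in options)
        print(f"{name:<17} {count:>2} GPUs -> {totals}")
```

For the A100-based systems, the two results correspond to the 40 GB and 80 GB GPU options.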

DGX-1

The DGX-1 is NVIDIA’s first-generation AI supercomputer in a 3U rackmount form factor. It features 8 GPUs based on the Pascal or Volta architecture, delivering up to 960 TFLOPS of FP16 Tensor Core performance in its Volta configuration. With 128 GB of HBM2 GPU memory and 512 GB of system memory, it is designed for deep learning, data science, and AI research workloads.

- Use Case: AI training, HPC simulations, and small-to-medium AI projects.

- Storage: 4 x 1.92 TB SSDs in RAID 0 for fast data access.

- Networking: 4 x 100 Gb/s EDR InfiniBand ports for cluster interconnect, plus 2 x 10 GbE ports.

- Price: $129,000

DGX Station

The DGX Station is a desktop AI workstation for researchers and developers who need supercomputing capabilities without a data center. Equipped with 4 Volta GPUs, it delivers 480 TFLOPS of FP16 Tensor Core performance. Its compact, quiet, water-cooled design lets it fit into an office environment.

- Use Case: AI development, prototyping, and smaller-scale model training.

- Storage: 3 x 1.92 TB SSDs in RAID 0 for data, plus 1 x 1.92 TB SSD for the OS.

- Networking: 2 x 10 GbE ports.

- Price: $49,999

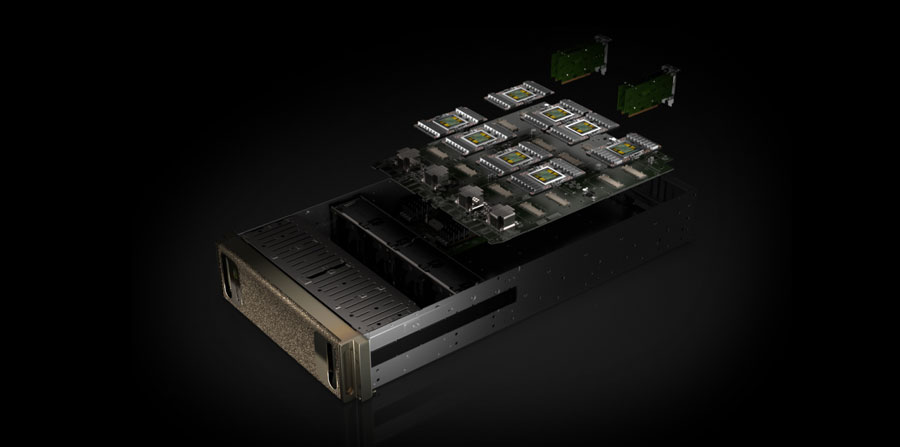

DGX-2

The DGX-2 represents a significant leap in performance: its 16 Volta GPUs are connected through NVIDIA NVSwitch, so every GPU can communicate with every other GPU at full NVLink bandwidth. It delivers up to 2 PFLOPS of FP16 Tensor Core performance, making it a powerhouse for large-scale AI training and inference.

- Use Case: Enterprise AI, HPC workloads, and large-scale AI model training.

- Storage: 30 TB NVMe for extensive data storage.

- Networking: 8 x 100 GbE ports for high-speed interconnects.

- Cooling: Air-cooled design for data center deployment.

- Price: $399,000

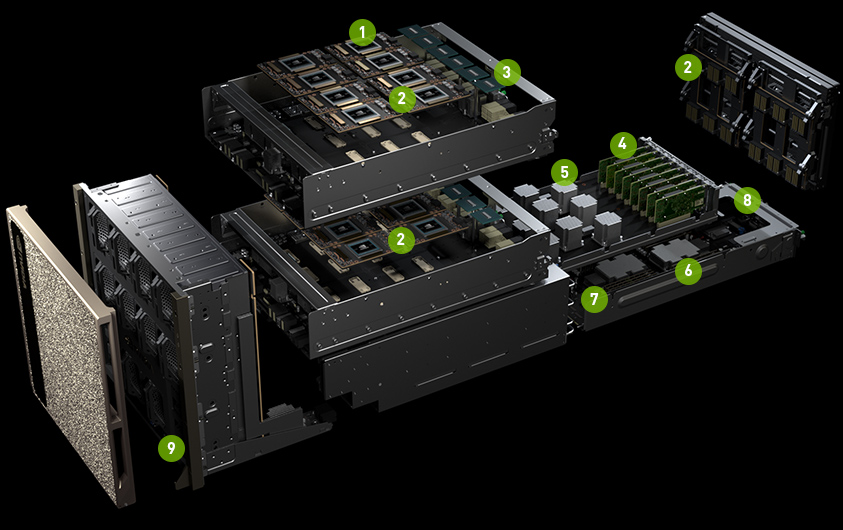

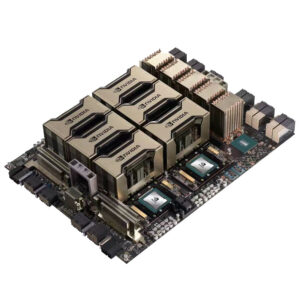

DGX A100

The DGX A100 is the first DGX system built on NVIDIA’s Ampere architecture. Its 8 A100 GPUs deliver up to 5 PFLOPS of FP16 Tensor Core performance (with sparsity); FP8 is not supported on Ampere and arrived only with Hopper. Multi-Instance GPU (MIG) lets each A100 be partitioned into up to seven isolated GPU instances, which makes the system well suited to multi-tenant environments.

- Use Case: AI training, HPC, and inference workloads in modern enterprise settings.

- Storage: 15 TB NVMe (30 TB on the 640 GB model) for fast data access.

- Networking: 8 x 200 Gb/s HDR InfiniBand ports for high-speed cluster networking.

- Cooling: Air-cooled.

- Price: $199,000

DGX Station A100

The DGX Station A100 is the Ampere-generation successor to the DGX Station, offering 4 A100 GPUs with 40 GB or 80 GB of memory each (160 GB or 320 GB in total). With up to 2.5 PFLOPS of FP16 Tensor Core performance (with sparsity), it suits teams that need data-center-class AI performance but have no data center to house it.

- Use Case: AI prototyping, research, and small-scale AI development.

- Storage: 7.68 TB NVMe for data, plus 1.92 TB NVMe for the OS.

- Networking: 2 x 10 GbE (10GBASE-T) ports.

- Cooling: Refrigerant-based liquid cooling for quiet operation in an office.

- Price: $99,000–$149,000

DGX H100

The DGX H100 is NVIDIA’s Hopper-generation flagship AI system, featuring 8 H100 GPUs linked by NVLink and NVSwitch for maximum GPU-to-GPU bandwidth. It delivers up to 32 PFLOPS of FP8 performance (with sparsity), making it suitable for large-scale AI workloads and next-generation HPC.

- Use Case: Advanced AI training, generative AI, and large-scale HPC.

- Storage: 30 TB NVMe.

- Networking: 8 x 400 Gb/s ports (InfiniBand or Ethernet) for massive data throughput.

- Cooling: Air-cooled.

- Price: ~$379,000
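The headline performance numbers on this page are aggregates: GPU count times a per-GPU peak Tensor Core rate, and for the Ampere and Hopper systems that rate assumes 2:4 structured sparsity, which doubles the dense figure. A minimal sketch, assuming NVIDIA's published dense per-GPU peaks of 312 TFLOPS (A100, FP16 Tensor) and 1,979 TFLOPS (H100 SXM, FP8 Tensor); these inputs come from NVIDIA datasheets rather than this listing, and `system_peak_pflops` is a hypothetical helper.

```python
# Sketch: aggregate peak Tensor Core throughput of a DGX system.
# ASSUMPTION: the dense per-GPU rates below are NVIDIA datasheet figures
# (A100 FP16 Tensor, H100 SXM FP8 Tensor), not values from this listing.
PEAK_INPUTS = [
    # (system, GPU count, precision, assumed dense TFLOPS per GPU)
    ("DGX A100", 8, "FP16", 312.0),
    ("DGX Station A100", 4, "FP16", 312.0),
    ("DGX H100", 8, "FP8", 1979.0),
]


def system_peak_pflops(num_gpus: int, dense_tflops: float, sparse: bool = True) -> float:
    """Return system peak in PFLOPS; 2:4 structured sparsity doubles the dense rate."""
    per_gpu_tflops = dense_tflops * (2 if sparse else 1)
    return num_gpus * per_gpu_tflops / 1000.0


if __name__ == "__main__":
    for name, gpus, precision, dense in PEAK_INPUTS:
        dense_total = system_peak_pflops(gpus, dense, sparse=False)
        sparse_total = system_peak_pflops(gpus, dense)
        print(f"{name:<17} {precision}: {dense_total:.1f} PFLOPS dense, "
              f"{sparse_total:.1f} PFLOPS with sparsity")
```

Unless a model has been pruned to the 2:4 sparsity pattern, the dense figures are the more relevant ceiling when comparing these systems.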
