Wholesale High Performance NVIDIA EGX Jetson Series/NVIDIA EGX A100/NVIDIA EGX H100/NVIDIA EGX V100/NVIDIA EGX Orin Series Server

The following table compares the five NVIDIA EGX platforms in this listing:

| Feature | NVIDIA EGX Jetson Series | NVIDIA EGX A100 | NVIDIA EGX H100 | NVIDIA EGX V100 | NVIDIA EGX Orin Series |
|---|---|---|---|---|---|
| Release Year | 2019 (Jetson Nano onwards) | 2020 | 2022 | 2017 | 2022 |
| GPU Architecture | Maxwell (Jetson Nano), Volta (Jetson Xavier NX), Ampere (Jetson Orin) | Ampere (A100) | Hopper (H100) | Volta (V100) | Ampere (Orin) |
| Number of GPUs | 1 (single module) | 1–4 | 1–4 | 1–4 | 1–16 (multi-module setup) |
| GPU Memory | 4 GB–32 GB (module dependent) | 40 GB or 80 GB per GPU | 80 GB per GPU | 16 GB or 32 GB per GPU | 8 GB–64 GB per Orin module |
| Total GPU Memory | 4 GB–64 GB (scalable) | Up to 320 GB (4 × 80 GB) | Up to 320 GB (4 × 80 GB) | Up to 128 GB | Up to 1 TB (multi-Orin setups) |
| Performance (AI) | 0.5–275 TOPS | Up to 2.5 PFLOPS (FP16 with sparsity, 4 GPUs) | Up to 32 PFLOPS (FP8) | Up to 500 TFLOPS (FP16) | Up to 275 TOPS per module |
| Precision Modes | INT8, FP16 | FP64, FP32, FP16, INT8 | FP8, FP16, FP32, FP64 | FP64, FP32, FP16, INT8 | INT8, FP16 |
| Interconnect | Ethernet/Wi-Fi | PCIe Gen4, NVLink | PCIe Gen5, NVLink, NVSwitch | PCIe Gen3, NVLink | Ethernet, NVLink |
| Networking | Integrated Ethernet or Wi-Fi | PCIe or Ethernet | PCIe Gen5 + NVSwitch | PCIe Gen3 or Ethernet | Ethernet up to 25 Gbps/module |
| Power Consumption | 5W–40W | ~300W per GPU | ~350W per GPU | ~250W per GPU | 50W–200W per module |
| Cooling | Passive or active (module-specific) | Air-cooled or liquid-cooled | Liquid-cooled | Air-cooled | Passive or active cooling |
| Use Cases | IoT, robotics, smart cameras | AI training, real-time inference | Generative AI, large-scale inference | Legacy AI inference, HPC | Smart factories, retail analytics |
| Scalability | Low (single-module devices) | Medium (4 GPUs max) | High (H100 + NVSwitch) | Medium (4 GPUs max) | Very High (up to 16 modules) |
| Price (USD) | $99–$2,000 | $50,000–$150,000 | $100,000–$250,000 | $40,000–$120,000 | $1,500–$20,000 per module |
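When sizing a system, the per-GPU rows can be scaled to a full configuration by multiplying by the maximum GPU or module count. The sketch below does this for memory and GPU power, using the upper-bound per-unit figures and unit counts listed in the table. The `Platform` class, its field names, and `summarize` are illustrative assumptions, not part of any NVIDIA tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Upper-bound per-unit figures taken from the comparison table (hypothetical helper)."""
    name: str
    max_units: int        # maximum GPUs or modules per system
    mem_per_unit_gb: int  # largest memory option per GPU/module
    watts_per_unit: int   # approximate upper power draw per GPU/module

    def total_memory_gb(self) -> int:
        return self.max_units * self.mem_per_unit_gb

    def total_power_w(self) -> int:
        return self.max_units * self.watts_per_unit


PLATFORMS = [
    Platform("EGX A100", 4, 80, 300),
    Platform("EGX H100", 4, 80, 350),
    Platform("EGX V100", 4, 32, 250),
    Platform("EGX Orin Series", 16, 64, 200),
]


def summarize(platforms):
    """Map each platform name to (total GPU memory in GB, total GPU power in W)."""
    return {p.name: (p.total_memory_gb(), p.total_power_w()) for p in platforms}


if __name__ == "__main__":
    for name, (mem_gb, watts) in summarize(PLATFORMS).items():
        print(f"{name}: {mem_gb} GB GPU memory, ~{watts} W GPU power")
```

The power figure covers GPUs or modules only; host CPUs, networking, and cooling add to the real system budget.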

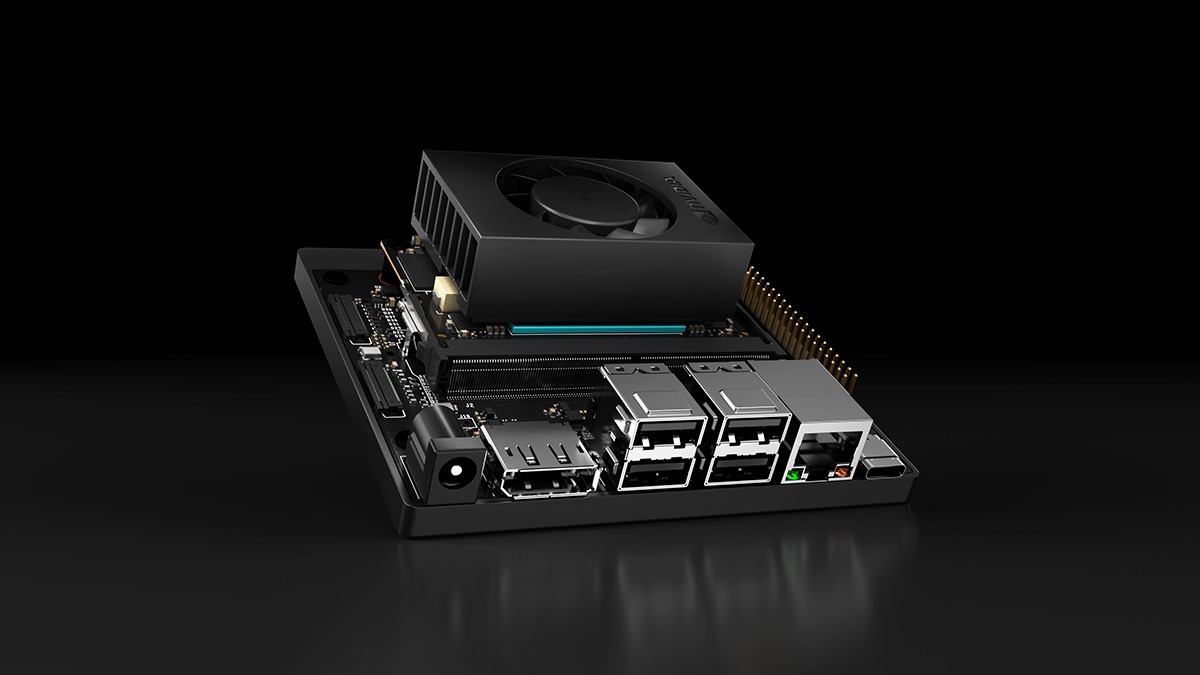

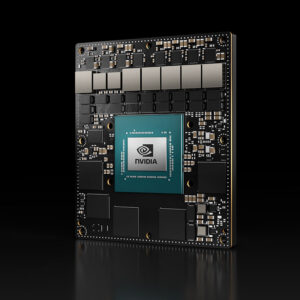

1. NVIDIA EGX Jetson Series

The NVIDIA EGX Jetson Series is a compact, energy-efficient edge AI platform designed for IoT, robotics, and smart devices. Its modules span NVIDIA’s Maxwell (Jetson Nano), Volta (Jetson Xavier NX), and Ampere (Jetson Orin) GPU architectures and cover lightweight to demanding AI workloads. Across the Jetson Nano, Jetson Xavier NX, and Jetson Orin, the series delivers from about 0.5 TOPS up to 275 TOPS of AI performance in low-power devices drawing 5W to 40W. Its small form factor and flexible design make it well suited to smart cameras, drones, autonomous robots, and other edge devices that need real-time AI inference.

- Ideal For: Lightweight IoT applications, smart sensors, robotics, and AI in constrained environments.

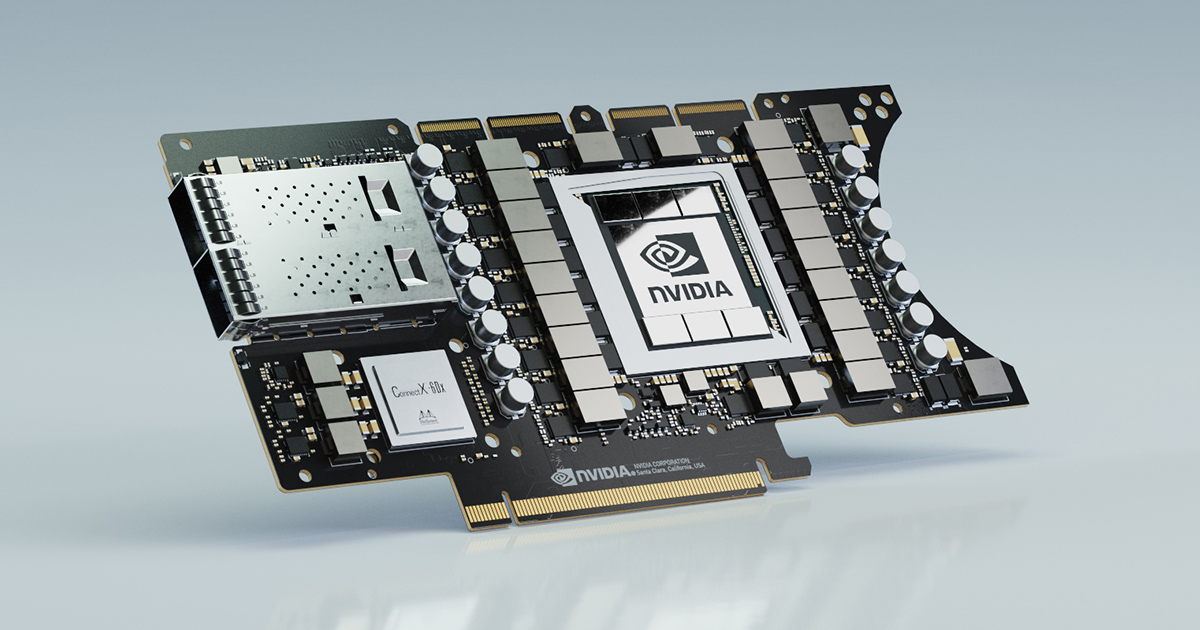

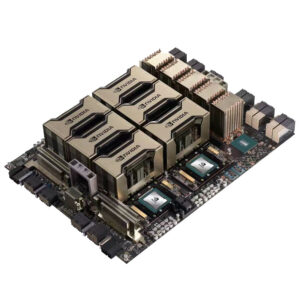

2. NVIDIA EGX A100

The NVIDIA EGX A100 brings AI and HPC capabilities to the edge with Ampere A100 GPUs. Each A100 GPU carries 40 GB of HBM2 or 80 GB of HBM2e memory and delivers up to 624 TFLOPS of FP16 Tensor Core performance with structured sparsity (312 TFLOPS dense), or roughly 2.5 PFLOPS across four GPUs, making it well suited to AI training, real-time inference, and analytics in data-intensive environments. It uses PCIe Gen4 and NVLink for high-speed connectivity and can handle demanding edge AI workloads such as video analytics, predictive maintenance, and digital twins.

- Ideal For: Enterprise edge deployments in industries like manufacturing, healthcare, and retail.

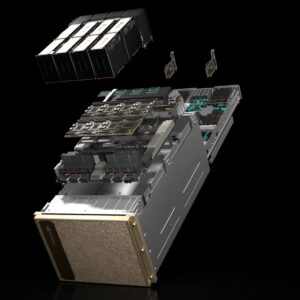

3. NVIDIA EGX H100

The NVIDIA EGX H100 is the highest-performing platform in this lineup, built on NVIDIA’s Hopper architecture for next-generation AI workloads. With HBM3 memory and support for FP8 precision, it is rated at up to 32 PFLOPS of AI performance per system. The EGX H100 is designed for generative AI, large-scale inference, and real-time analytics at the edge. NVSwitch and PCIe Gen5 provide high-bandwidth GPU-to-GPU and host connectivity, and liquid cooling helps sustain performance under heavy load.

- Ideal For: Generative AI, advanced inference, and large-scale edge AI deployments.
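To see why lower-precision modes such as FP8 matter for large-scale inference, it helps to estimate how much GPU memory a model's weights occupy at each precision. The sketch below assumes a hypothetical 70-billion-parameter model and the 80 GB per-GPU capacity from the table; `weight_memory_gb` and `gpus_needed` are illustrative helper names. It counts weights only, so activations, caches, and runtime overhead would add to these figures.

```python
import math

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"FP8": 1, "FP16": 2, "FP32": 4, "FP64": 8}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Memory for model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def gpus_needed(num_params: int, precision: str, gpu_mem_gb: int = 80) -> int:
    """Minimum number of GPUs whose combined memory holds the weights."""
    return math.ceil(weight_memory_gb(num_params, precision) / gpu_mem_gb)


if __name__ == "__main__":
    params = 70_000_000_000  # hypothetical model size, chosen for illustration
    for precision in ("FP8", "FP16", "FP32"):
        size = weight_memory_gb(params, precision)
        count = gpus_needed(params, precision)
        print(f"{precision}: {size:.0f} GB of weights, at least {count} x 80 GB GPU(s)")
```

Under these assumptions, halving the precision halves the weight footprint, which is what lets a model that spans several GPUs at FP32 fit on fewer GPUs at FP8.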

4. NVIDIA EGX V100

The NVIDIA EGX V100 is an AI platform based on NVIDIA’s Volta architecture, offering up to 500 TFLOPS of FP16 Tensor Core performance in a four-GPU configuration (up to 125 TFLOPS per GPU). Equipped with 16 GB or 32 GB of HBM2 memory per GPU, it supports real-time inference and HPC workloads. Although it is an earlier-generation platform, it remains a dependable option for legacy edge AI and data processing applications. NVLink interconnects provide fast GPU-to-GPU communication, making the EGX V100 suitable for moderate AI workloads.

- Ideal For: Traditional AI inference, HPC, and data analytics at the edge.

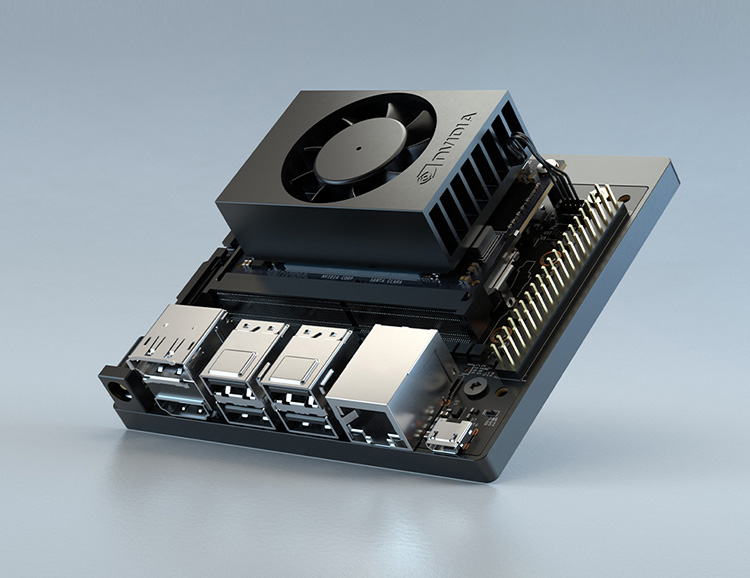

5. NVIDIA EGX Orin Series

The NVIDIA EGX Orin Series is a high-performance edge AI platform for smart applications that need substantial compute in a compact form factor. Powered by the Ampere architecture and Jetson Orin modules, it delivers up to 275 TOPS per module, and multi-module setups scale to as many as 16 modules. Its power envelope (50W–200W per module) and support for real-time data processing make it well suited to applications such as smart factories, autonomous vehicles, and retail analytics.

- Ideal For: AI-driven edge applications requiring scalability, power efficiency, and high throughput.
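One way to narrow the five platforms down for a given deployment is to filter on per-unit memory and power. The sketch below applies that filter using the upper-bound per-GPU or per-module figures from the comparison table. The `SPECS` dictionary and the `candidates` function are hypothetical names introduced for illustration, and a real selection would also weigh price, cooling, and interconnect.

```python
# Upper-bound per-unit figures from the comparison table:
# name -> (max memory per GPU/module in GB, max power per GPU/module in W)
SPECS = {
    "EGX Jetson Series": (32, 40),
    "EGX A100": (80, 300),
    "EGX H100": (80, 350),
    "EGX V100": (32, 250),
    "EGX Orin Series": (64, 200),
}


def candidates(min_mem_gb: int, max_watts: int) -> list[str]:
    """Platforms offering at least min_mem_gb per unit within max_watts per unit."""
    return [
        name
        for name, (mem_gb, watts) in SPECS.items()
        if mem_gb >= min_mem_gb and watts <= max_watts
    ]


if __name__ == "__main__":
    # Example: a fanless enclosure limited to 50 W that needs 16 GB per module.
    print(candidates(min_mem_gb=16, max_watts=50))
    # Example: a rack with 400 W per GPU slot that needs 80 GB per GPU.
    print(candidates(min_mem_gb=80, max_watts=400))
```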
