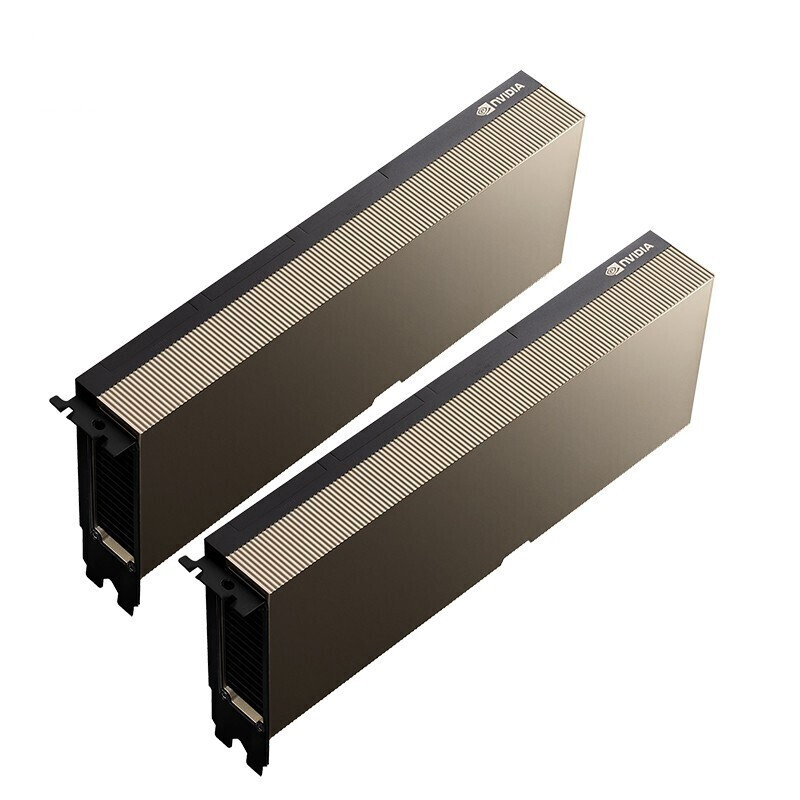

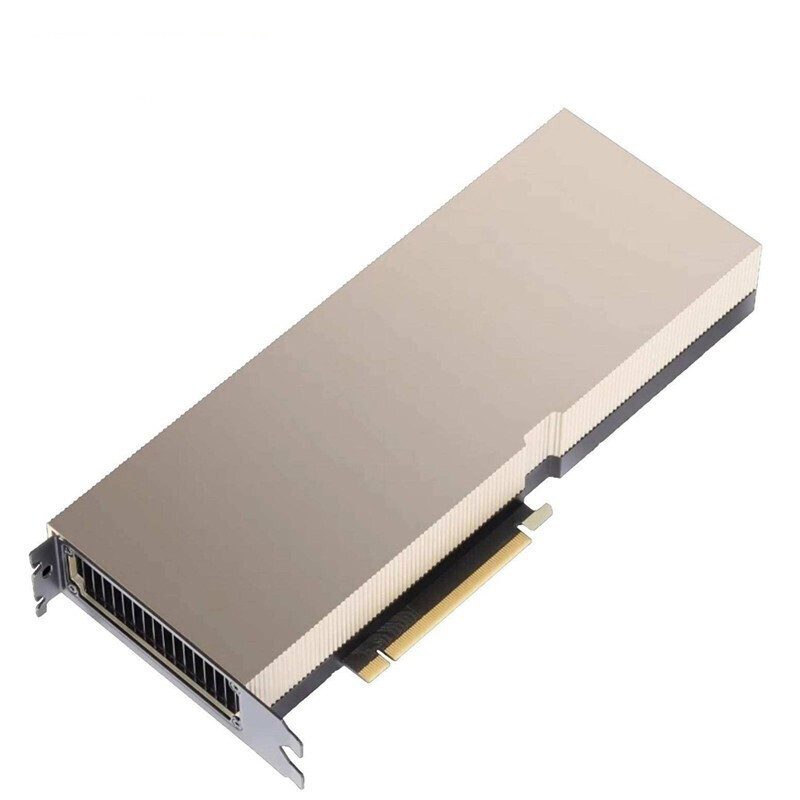

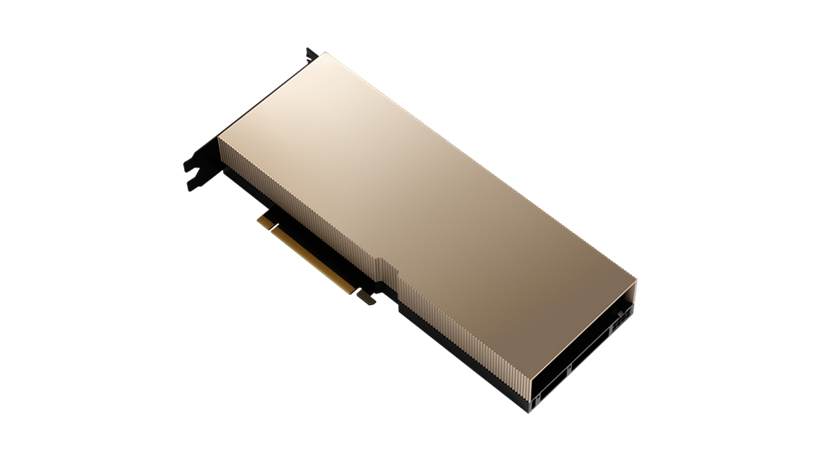

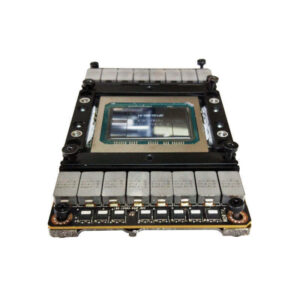

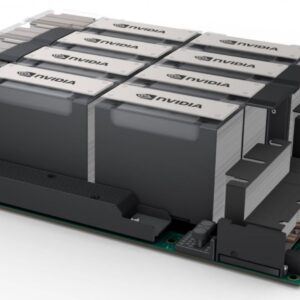

Wholesale Original New NVIDIA Tesla A100 GPU 80GB 40GB A100 PCIE Processor Workstation Computing Graphics Card

Here’s a comparison of the NVIDIA Tesla A100 80GB, the NVIDIA Tesla A100 40GB, and other NVIDIA Tesla PCIe cards:

| Feature | NVIDIA Tesla A100 80GB | NVIDIA Tesla A100 40GB | Other NVIDIA Tesla PCIe Cards |
|---|---|---|---|
| Architecture | NVIDIA Ampere | NVIDIA Ampere | Varies by model (e.g., Kepler, Maxwell, Pascal, Volta, Turing) |
| Memory Capacity | 80GB HBM2e | 40GB HBM2 | Varies (e.g., 24GB GDDR5 for Tesla P40) |
| Memory Bandwidth | 2.0 TB/s (SXM4); 1.9 TB/s (PCIe) | 1.6 TB/s | Varies (e.g., 346 GB/s for Tesla P40) |
| Form Factor | SXM4 or dual-slot PCIe | SXM4 or dual-slot PCIe | PCIe (dual-slot for most models) |
| Interface | NVIDIA NVLink, up to 600 GB/s inter-GPU (PCIe version: NVLink bridge between two GPUs); PCIe Gen4 to host | NVIDIA NVLink, up to 600 GB/s inter-GPU (PCIe version: NVLink bridge between two GPUs); PCIe Gen4 to host | PCIe Gen3 or Gen4 |
| CUDA Cores | 6,912 | 6,912 | Varies (e.g., 3,840 for Tesla P40) |
| Tensor Cores | 432 (third generation) | 432 (third generation) | None on Kepler, Maxwell, and Pascal models (e.g., Tesla P40); present from Volta onward (e.g., 640 on Tesla V100) |
| Power Consumption (TDP) | 400W (SXM4); 300W (PCIe) | 400W (SXM4); 250W (PCIe) | Varies (e.g., 250W for Tesla P40) |
| Key Features | Multi-Instance GPU (up to 7 instances of 10GB each), NVLink | Multi-Instance GPU (up to 7 instances of 5GB each), NVLink | Varies; optimized for compute, deep learning, or visualization |
| Target Workload | AI/ML training, HPC, data analytics | AI/ML training, HPC, data analytics | Varies by model (e.g., deep learning inference for Tesla P40) |
| Peak FP32 Performance | Up to 19.5 TFLOPS | Up to 19.5 TFLOPS | Varies (e.g., about 12 TFLOPS for Tesla P40) |
| Peak FP64 Performance | Up to 9.7 TFLOPS | Up to 9.7 TFLOPS | Varies (e.g., well under 1 TFLOPS for Tesla P40, whose FP64 rate is 1/32 of its FP32 rate) |
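The peak FP32 and FP64 figures in the table follow from a common back-of-envelope formula: number of arithmetic units × boost clock × 2, because one fused multiply-add (FMA) counts as two floating-point operations. The short Python sketch below checks that arithmetic. It assumes inputs not listed in the table: a 1.41 GHz boost clock and 3,456 FP64 units for the A100, and a 1.531 GHz boost clock for the Tesla P40. The helper name `peak_tflops` is hypothetical and purely illustrative.

```python
# Theoretical peak throughput, assuming every unit issues one FMA per clock.
# One FMA = a multiply plus an add = 2 floating-point operations.
FLOPS_PER_FMA = 2


def peak_tflops(units: int, boost_clock_ghz: float) -> float:
    """Hypothetical helper: units x clock (Hz) x 2 FLOPs per FMA, in TFLOPS."""
    return units * boost_clock_ghz * 1e9 * FLOPS_PER_FMA / 1e12


# Assumed A100 inputs: 1.41 GHz boost clock, 6,912 FP32 units (the CUDA core
# count in the table) and 3,456 FP64 units (108 SMs x 32 FP64 units).
A100_BOOST_GHZ = 1.41
a100_fp32 = peak_tflops(6912, A100_BOOST_GHZ)
a100_fp64 = peak_tflops(3456, A100_BOOST_GHZ)

# Assumed Tesla P40 inputs: 3,840 CUDA cores at a 1.531 GHz boost clock.
p40_fp32 = peak_tflops(3840, 1.531)

if __name__ == "__main__":
    print(f"A100 FP32: {a100_fp32:.1f} TFLOPS")  # 19.5
    print(f"A100 FP64: {a100_fp64:.1f} TFLOPS")  # 9.7
    print(f"P40 FP32:  {p40_fp32:.1f} TFLOPS")   # 11.8
```

These are theoretical ceilings; sustained throughput in real workloads is lower, and the A100's Tensor Core modes (such as TF32 or FP64 Tensor Core) follow separate, higher peaks not covered by this formula.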
