Supermicro NVIDIA HGX™ B200 Systems: 100% Original, Brand New, Wholesale Supermicro Server

Supermicro 1U Xeon D-1700 Systems Comparison

The table below compares the SYS-122HA-TN-LCC, SYS-122H-TN, and SYS-122C-TN.

| Feature | SYS-122HA-TN-LCC | SYS-122H-TN | SYS-122C-TN |
|---|---|---|---|
| Form Factor | 1U Rackmount | 1U Rackmount | 1U Rackmount |
| Processor | Intel® Xeon® D-1700 (Up to 20C/40T) | Intel® Xeon® D-1700 (Up to 20C/40T) | Intel® Xeon® D-1700 (Up to 20C/40T) |
| Memory | Up to 1TB DDR4 ECC RDIMM | Up to 1TB DDR4 ECC RDIMM | Up to 1TB DDR4 ECC RDIMM |
| Storage | 4x 2.5″/3.5″ Hot-swap (SATA/NVMe) | 4x 2.5″/3.5″ Hot-swap (SATA/NVMe) | 4x 2.5″/3.5″ Hot-swap (SATA/NVMe) |
| Expansion Slots | Optional OCP 3.0 (10/25GbE) | Optional OCP 3.0 (10/25GbE) | Optional OCP 3.0 (10/25GbE) |
| Networking | 4x 1GbE (Base) + Optional 10/25GbE | 4x 1GbE (Base) + Optional 10/25GbE | 4x 1GbE (Base) + Optional 10/25GbE |
| Power Supply | Dual 500W (1+1 Redundant) | Dual 500W (1+1 Redundant) | Dual 500W (1+1 Redundant) |
| Cooling | LCC-Optimized (Low-Cost Cooling) | Standard High-Performance Cooling | Standard High-Performance Cooling |
| Use Cases | Hyperscale, Cloud, Energy-Efficient DC | Enterprise, Storage, Virtualization | SMB, Edge, Light Virtualization |
| Special Features | ✔ Optimized for power/thermal efficiency | ✔ Balanced performance & expandability | |

Supermicro NVIDIA HGX™ B200 Systems – Product Description

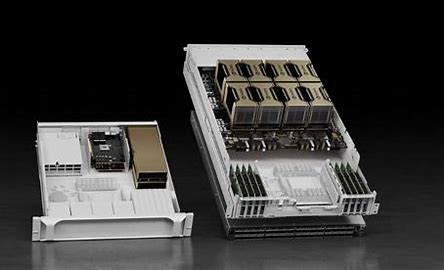

The Supermicro NVIDIA HGX™ B200 Systems are ultra-high-performance AI servers designed for large-scale AI training, inference, and accelerated computing. Built on NVIDIA’s next-generation Blackwell architecture, these systems deliver unprecedented computational power, scalability, and efficiency for enterprise AI, HPC, and cloud workloads.

Key Features & Specifications

1. Unmatched AI Performance

-

Powered by NVIDIA HGX™ B200 GPUs – Featuring next-gen Blackwell GPUs with advanced tensor cores and FP8/FP4 precision for 5x faster AI training and 30x faster inference vs. previous generations.

-

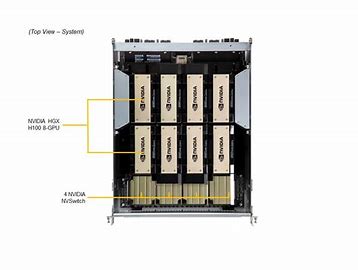

Scalable GPU Configurations – Supports 4x, 8x, or 16x NVIDIA B200 GPUs in a single system, enabling petaflop-scale AI performance.

-

NVLink & NVSwitch – High-speed interconnect technology ensures low-latency, high-bandwidth communication between GPUs.

2. Cutting-Edge Server Platform

- Supermicro’s Advanced GPU-Optimized Chassis – Designed for maximum thermal efficiency with direct liquid cooling (DLC) or air cooling options.
- Dual/Quad Intel® or AMD EPYC™ CPUs – Supports the latest multi-core processors for balanced CPU-GPU workloads.
- High-Speed Memory & Storage:
  - Up to 2TB DDR5 ECC memory per node.
  - NVMe/SAS/SATA storage with hot-swappable bays for rapid data access.

3. Enterprise-Grade Networking & Scalability

- NVIDIA Quantum-2 InfiniBand or Spectrum-X Ethernet – Ensures ultra-low-latency networking for distributed AI/ML clusters.
- PCIe 5.0 & CXL Support – Future-proof expansion for high-speed accelerators and memory pooling.

4. Optimized for AI & HPC Workloads

- AI Training & LLMs – Ideal for training and serving GPT-4-class large language models (LLMs) and other generative AI workloads.
- Scientific Computing – Accelerates HPC simulations, climate modeling, and drug discovery.
- Cloud & Hyperscale Deployments – Supports multi-node scaling for AI supercomputing clusters.

Available Configurations

| Model | GPU Configuration | CPU Support | Cooling | Use Case |
|---|---|---|---|---|
| Supermicro HGX B200-4 | 4x NVIDIA B200 GPUs | Dual Intel/AMD EPYC | Air/Liquid Cooling | Mid-range AI training |
| Supermicro HGX B200-8 | 8x NVIDIA B200 GPUs | Quad Intel/AMD EPYC | Liquid Cooling (DLC) | Large-scale AI/LLM training |
| Supermicro HGX B200-16 | 16x NVIDIA B200 GPUs | Quad/Octa CPU Nodes | Liquid Cooling (DLC) | Hyperscale AI supercomputing |

Why Choose Supermicro NVIDIA HGX™ B200 Systems?

✔ Industry-Leading AI Performance – Powered by NVIDIA Blackwell architecture for breakthrough AI speed.

✔ Scalable & Energy-Efficient – Supports liquid cooling for dense, high-performance deployments.

✔ Enterprise-Ready – Optimized for data centers, cloud providers, and AI research labs.

Ideal for AI startups, cloud service providers, and enterprises deploying next-generation generative AI and HPC workloads.
